Deployment of Deep Learning Models with REST API

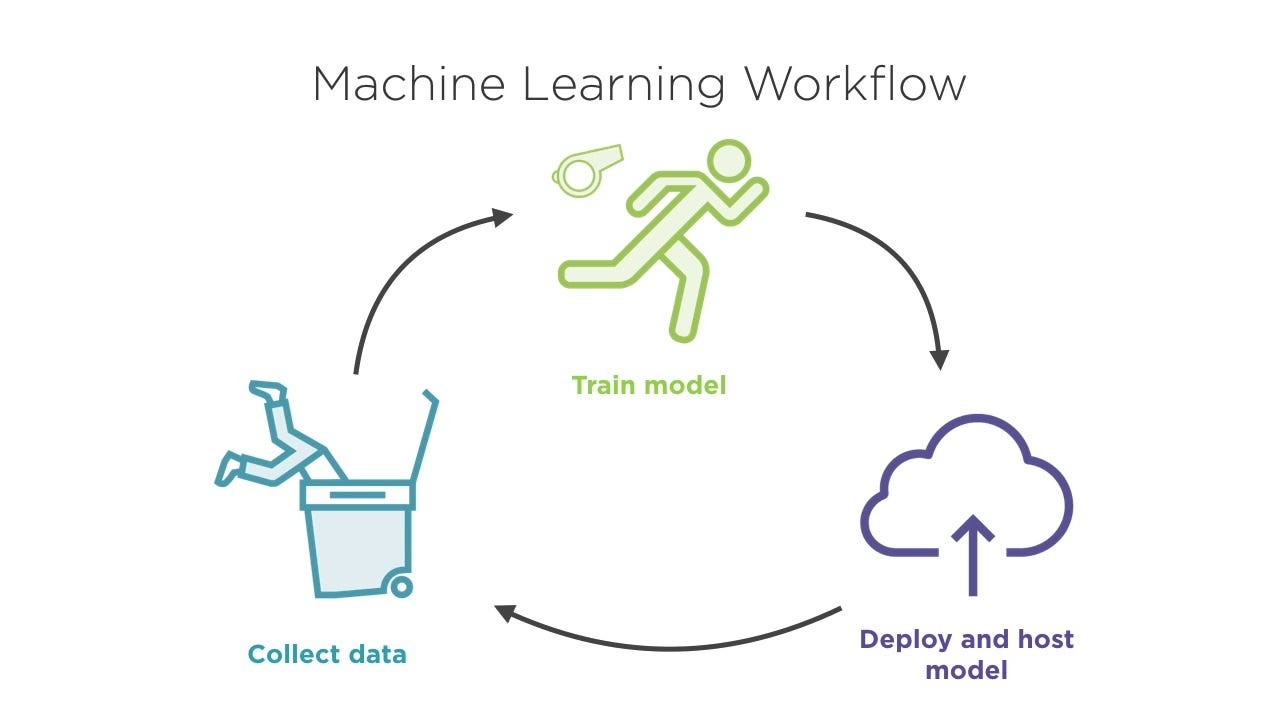

Machine learning model deployment is the process of making a model trained on a dataset available to systems or applications used in the real world. This usually entails provisioning the required hardware, such as servers or cloud platforms, and creating the software environment that hosts and runs the model. Once deployed, the trained model is integrated into a system or application, where it processes input data and generates the required outputs or predictions. The model can be exposed as an API (Application Programming Interface) that other software components call, or it can be embedded directly in an application. Common deployment options include local deployment, cloud deployment, and deployment as an API.

Importance of Deploying Deep Learning Models as REST APIs

REST (Representational State Transfer) is an architectural style that makes it easier to build networked applications, and a REST API is a web API that follows it. By conforming to REST's constraints, developers can build scalable, dependable, and stateless web services. These APIs interact with resources, each identified by a URL, using the HTTP protocol and its methods (GET, POST, PUT, and DELETE).

A REST API follows several guiding principles. It uses a client-server architecture in which entities communicate over a network: clients send requests, and servers reply by returning the requested resources or performing the requested actions.

Benefits of using REST APIs for model deployment

REST APIs used for model deployment offer several benefits. Some of them are:

- Scalability: REST APIs provide scalable deployment options for deep learning models. Because the models are hosted as APIs on server infrastructure, many clients can use them simultaneously. The server side can handle incoming requests in a scalable, distributed manner, so the models can support a large user base or several applications without being limited by the capabilities of a single machine, and they can keep up with growing workloads.

- Flexibility: REST APIs can accept inputs and return results in various formats, such as JSON or binary data, which lets them meet the requirements of different applications. This adaptability improves the interoperability of deep learning models and makes it easier to integrate them into different software ecosystems.

- Security and access control: REST APIs allow security controls to be placed in front of the deployed models. Access to the API endpoints can be restricted through authentication and authorization, so that only requests from authenticated and permitted users or clients are handled. The data sent between the client and the server can also be protected with encryption, for example by serving the API over HTTPS.

- Monitoring and analytics: REST APIs make it practical to track and examine how the deployed models operate. By recording and logging API requests and responses, you can observe different facets of the deployment, such as usage, latency, and errors.

- Versioning and deployment flexibility: REST APIs support versioning, which allows models to be updated and modified without disrupting existing clients. You can iteratively improve the models and add new capabilities by releasing new API versions while keeping backward compatibility, so users do not have to update their integration code immediately. This simplifies the deployment procedure and improves the user experience.

- Flexibility for front-end development: REST APIs provide a clear separation between the front-end client application and the back-end model serving. Because of this separation, front-end developers can concentrate on creating intuitive user interfaces and experiences without having to fully understand the underlying models or machine learning methods. The API links the client to the models, which can be treated as a "black box."
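Three of the benefits above (access control, monitoring, and versioning) can be sketched in a few lines of Flask. This is only an illustration under stated assumptions: the X-API-Key header, the "demo-key" value, the /v1/predict and /v2/predict paths, and the two stand-in prediction functions are all hypothetical and do not come from a real model or service.

```python
import logging

from flask import Flask, jsonify, request

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("model_api")

app = Flask(__name__)

# Hypothetical API keys; a real deployment would load these from a secret store.
VALID_API_KEYS = {"demo-key"}


def predict_v1(values):
    # Stand-in for a first model version: returns the mean of the inputs.
    return sum(values) / len(values)


def predict_v2(values):
    # Stand-in for an updated model version: returns the maximum of the inputs.
    return max(values)


@app.before_request
def check_api_key():
    # Access control: reject requests that do not carry a known key.
    if request.headers.get("X-API-Key") not in VALID_API_KEYS:
        return jsonify({"error": "unauthorized"}), 401


@app.after_request
def log_request(response):
    # Monitoring: record method, path, and status code for every request.
    logger.info("%s %s -> %s", request.method, request.path, response.status_code)
    return response


@app.route("/v1/predict", methods=["POST"])
def predict_route_v1():
    values = request.get_json()["values"]
    return jsonify({"version": 1, "prediction": predict_v1(values)})


@app.route("/v2/predict", methods=["POST"])
def predict_route_v2():
    # Versioning: the new behavior lives at a new path, so v1 clients keep working.
    values = request.get_json()["values"]
    return jsonify({"version": 2, "prediction": predict_v2(values)})
```

Because both versions are served side by side, an existing client can keep calling /v1/predict while newer clients move to /v2/predict.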

Process of Deploying Deep Learning Models with REST APIs

Deploying deep learning models with REST APIs involves several steps. Here is a broad overview of the process:

Step 1. Train and save the Deep Learning Model

Training and saving a deep learning model involves several steps. The first is to compile a suitable dataset, which is then cleaned, normalized, resized, and split. A suitable deep learning architecture is then chosen based on the problem, the data, and the available resources.

After the model has been trained, its performance evaluated on a validation dataset, and its hyperparameters tuned, the trained model's weights and architecture are saved in a format suitable for deployment and inference, such as TensorFlow's SavedModel format, PyTorch's .pt files, or the ONNX format.
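The "normalized" and "split" parts of data preparation can be sketched with NumPy. The function names, the 80/20 split, and the synthetic data below are assumptions made for illustration only; the point of the sketch is that the scaling statistics are computed on the training split and then reused for the validation data.

```python
import numpy as np


def train_val_split(features, labels, val_fraction=0.2, seed=0):
    """Shuffle the samples and hold out a fraction for validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(features))
    n_val = int(len(features) * val_fraction)
    val_idx, train_idx = order[:n_val], order[n_val:]
    return (features[train_idx], labels[train_idx]), (features[val_idx], labels[val_idx])


def fit_normalizer(train_features):
    """Compute per-feature mean and standard deviation on the training split only."""
    mean = train_features.mean(axis=0)
    std = train_features.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # avoid division by zero for constant features
    return mean, std


def apply_normalizer(features, mean, std):
    """Scale features with statistics that were computed earlier."""
    return (features - mean) / std


# Synthetic data used only for illustration.
X = np.random.default_rng(1).normal(loc=5.0, scale=2.0, size=(10, 4))
y = np.arange(10)

(X_train, y_train), (X_val, y_val) = train_val_split(X, y)
mean, std = fit_normalizer(X_train)
X_train_norm = apply_normalizer(X_train, mean, std)
X_val_norm = apply_normalizer(X_val, mean, std)
print(X_train_norm.shape, X_val_norm.shape)
```

The same mean and std would normally be stored next to the saved model, so that the API can apply identical scaling to incoming requests at inference time.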

Step 2. Choose a Framework for Serving the Model

Several well-known frameworks are available for serving deep learning models through REST APIs, each with its own benefits and considerations. Some frequently used frameworks are listed below:

- TensorFlow Serving: Created specifically for serving models, it offers a versatile and efficient way to deploy models in production settings. TensorFlow Serving supports model versioning, simple scaling, and system integration.

- Flask: A popular option for creating REST APIs because it is flexible and simple. Flask works smoothly with numerous deep learning packages and leaves you free to design the API endpoints as needed.

- Django: A powerful and feature-rich web framework. It offers more functionality and structure than Flask, but it may need more initial setup and configuration. Django is well suited to large-scale applications that require more than just serving deep learning models.

- FastAPI: It offers high performance through asynchronous request processing and generates API documentation automatically. FastAPI is also known for working well with different deep learning libraries, its scalability, and its simplicity.

When choosing a framework, consider performance, scalability, community support, and how well it integrates with your preferred deep learning library. Also consider the objectives of the particular project, and select the framework that best satisfies its demands for deployment flexibility, development speed, and maintainability.

Step 3. Prepare the Deployment Environment

To deploy the deep learning model, set up a server or cloud instance to host it and make it reachable over a REST API. Choose a server or cloud provider that meets the needs of the deployment.

This could be a cloud platform such as Amazon Web Services (AWS), Google Cloud Platform (GCP), or Microsoft Azure, a virtual private server (VPS), or a dedicated server. To set up the environment, install all necessary dependencies on the server or cloud instance, including the deep learning framework (such as TensorFlow or PyTorch) and any other libraries the model needs. Follow the installation guidelines provided by the framework and libraries you selected.

If needed, set up a web server such as Nginx or Apache to manage incoming HTTP requests, depending on the particular demands of your deployment.
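Once the dependencies are installed, a quick check that they are importable on the server can save debugging time later. The sketch below uses only the standard library; the REQUIRED list is a hypothetical example and should be adjusted to whatever your model actually needs.

```python
import importlib.util
import sys


def missing_packages(required):
    """Return the top-level package names in `required` that cannot be imported here."""
    return [name for name in required if importlib.util.find_spec(name) is None]


# Hypothetical requirement list; adjust it to the libraries your model needs.
REQUIRED = ["flask", "numpy", "tensorflow"]

missing = missing_packages(REQUIRED)
print("Python", sys.version.split()[0])
print("Missing packages:", missing or "none")
```

Running this inside the activated environment on the target machine shows which packages still have to be installed before the API can start.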

Step 4. Implement the REST API

In the chosen framework, create a model inference endpoint that receives HTTP requests. The endpoint should accept the input data, load the previously saved model if it is not already in memory, and carry out the inference. The output is then returned to the client in the response.
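As a framework-independent illustration of such an endpoint, the sketch below uses only Python's standard library. The stand_in_model function, the /predict path, and the {"values": [...]} payload shape are assumptions made for the example; a real service would call the saved model instead.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def stand_in_model(values):
    # Placeholder for a real model: returns the sum of the inputs.
    return sum(values)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        # Read exactly the number of bytes announced by the client.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"prediction": stand_in_model(payload["values"])}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example output quiet


def call_predict(port, values):
    """Send a POST request with a JSON body and return the decoded JSON reply."""
    req = urllib.request.Request(
        f"http://127.0.0.1:{port}/predict",
        data=json.dumps({"values": values}).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler bypasses any system proxy for this local call.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(req) as resp:
        return json.loads(resp.read())


server = HTTPServer(("127.0.0.1", 0), PredictHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
result = call_predict(server.server_port, [1, 2, 3])
server.shutdown()
server.server_close()
print(result)
```

The request/response cycle is the same in Flask, Django, or FastAPI; those frameworks mainly remove the manual header and body handling shown here.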

Step 5. Handle Input Data

Depending on the requirements of the model, the input data may need to be preprocessed before it is fed into the model. This may entail resizing images, normalizing values, or changing the data format. The API endpoints should incorporate the required preprocessing steps.
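A preprocessing step for an image model might look like the sketch below. The input contract is an assumption made for illustration: a JSON object with a "pixels" field holding a 28x28 grid of values between 0 and 255. The preprocess name and the error messages are likewise hypothetical.

```python
import numpy as np

# Hypothetical input contract: 28x28 grayscale images with pixel values 0-255.
EXPECTED_SHAPE = (28, 28)


def preprocess(payload):
    """Convert a decoded JSON payload into a model-ready batch, or raise ValueError."""
    if not isinstance(payload, dict) or "pixels" not in payload:
        raise ValueError("payload must be an object with a 'pixels' field")
    try:
        image = np.asarray(payload["pixels"], dtype=np.float32)
    except (TypeError, ValueError) as exc:
        raise ValueError("'pixels' must be a rectangular array of numbers") from exc
    if image.shape != EXPECTED_SHAPE:
        raise ValueError(f"expected shape {EXPECTED_SHAPE}, got {image.shape}")
    image = image / 255.0  # scale pixel values into [0, 1]
    return image[np.newaxis, ..., np.newaxis]  # add batch and channel axes


batch = preprocess({"pixels": [[255] * 28] * 28})
print(batch.shape)
```

Raising ValueError for malformed input lets the endpoint translate the problem into an HTTP 400 response instead of failing inside the model.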

Step 6. Perform Model Inference

With the deep learning model loaded into memory, use it to run inference on the preprocessed input data. Pass the input data to the model to obtain the output results or predictions.
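One common pattern is to load the model once and reuse it for every request, rather than reloading it per call. The sketch below shows the idea with functools.lru_cache. StandInModel is a hypothetical placeholder with a Keras-like predict() method, not a real network, and LOAD_COUNT exists only to make the caching visible.

```python
from functools import lru_cache

import numpy as np


class StandInModel:
    """Hypothetical placeholder with a Keras-like predict(); not a real network."""

    def __init__(self, weights):
        self.weights = weights

    def predict(self, batch):
        return batch @ self.weights


LOAD_COUNT = 0  # counts how often the (expensive) load step actually runs


@lru_cache(maxsize=1)
def get_model():
    """Load the model on first use and return the cached instance afterwards."""
    global LOAD_COUNT
    LOAD_COUNT += 1
    # A real service would read the saved model from disk here.
    return StandInModel(np.array([[0.5], [1.5]]))


def run_inference(batch):
    """Run the model on a batch and return plain lists that serialize to JSON."""
    predictions = get_model().predict(np.asarray(batch, dtype=np.float64))
    return predictions.tolist()


first = run_inference([[1.0, 2.0]])
second = run_inference([[2.0, 0.0]])
print(first, second, LOAD_COUNT)
```

Converting the result with tolist() matters because NumPy arrays cannot be passed directly to a JSON encoder.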

Building a Flask Web Server

Flask is a good option for both novice and experienced developers because of its simplicity, flexibility, and ease of use. Its minimal design offers a versatile and straightforward approach to building web apps, and it provides essential capabilities such as routing, templating, and request/response handling. The code is also available in Google Colab.

Step 1: Create a Virtual Environment

python3 -m venv myenv

This command creates a virtual environment named "myenv" in the current directory. Activate it with the command for your operating system.

For Windows:

myenv\Scripts\activate

For Linux or Mac:

source myenv/bin/activate

Step 2: Install Flask

With the virtual environment activated, run the following command to install Flask.

pip install flask

This command installs Flask into the virtual environment.

Step 3: Verify the Installation

Let's build a simple "Hello, World!" application to check that Flask is installed correctly.

Create a new Python file in the project directory called app.py (or any other name you like).

Open app.py in a text editor and add the following code:

from flask import Flask

app = Flask(__name__)

@app.route('/')
def hello():
    return 'Hello, World!'

if __name__ == '__main__':
    app.run()

Save the file.

In your command prompt or terminal, navigate to the directory where app.py is located and run this command:

python app.py

If everything is set up correctly, the terminal will show:

Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)

Open this URL in a browser. The page will show Hello, World!, which confirms that Flask is installed and working.
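The same check can also be done without starting a server or opening a browser, using Flask's built-in test client. The sketch below repeats the Hello, World! app so that it is self-contained; the variable names client and response are only illustrative.

```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
def hello():
    return 'Hello, World!'


# The test client sends requests to the app in-process, so no server is needed.
client = app.test_client()
response = client.get('/')
print(response.status_code, response.get_data(as_text=True))
```

This approach is handy for automated tests, because it exercises the routes without binding to a network port.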

Step 4: Import Flask and other required libraries

In a new Python file, such as app.py, import Flask and any additional libraries you need to manage HTTP requests, responses, and the deep learning model.

from flask import Flask, request, jsonify

import tensorflow as tf

Step 5: Create the Flask application instance

Initialize the Flask application by creating an instance of the Flask class. Provide __name__ as the argument, representing the current module's name. It is needed for Flask to locate resources like templates and static files. Add any configuration options as required.

app = Flask(__name__)

Step 6: Define the endpoints and routes

Use the @app.route() decorator to define routes and the endpoint functions that handle them. Routes determine the URL paths at which the application responds to HTTP requests. Within each endpoint function, you handle the request and produce the necessary response.

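@app.route('/predict', methods=['POST'])
def predict():
    # Get data from the request (assumed here to be a JSON array of numeric inputs)
    data = request.json

    # Preprocess data if needed; here the JSON list is converted to a tensor
    inputs = tf.convert_to_tensor(data, dtype=tf.float32)

    # Use the deep learning model to make predictions
    predictions = model.predict(inputs)

    # Process the predictions if needed

    # Return the response as JSON (NumPy arrays must be converted to lists first)
    return jsonify({'predictions': predictions.tolist()})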

The /predict route in this example is defined with the POST method, indicating that it expects to receive data in the request body. The predict() function reads the incoming data, uses the deep learning model to make predictions, and returns the results as a JSON response.
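To try a route like this without a trained network, the model can be swapped for a stand-in. In the sketch below, StandInModel is a hypothetical placeholder that mimics a Keras-style predict() method, and the {"instances": [...]} payload shape is an assumption made for the example.

```python
import numpy as np
from flask import Flask, jsonify, request

app = Flask(__name__)


class StandInModel:
    """Hypothetical placeholder that mimics a Keras-style predict()."""

    def predict(self, batch):
        # Returns one value per input row: the sum of that row.
        return batch.sum(axis=1, keepdims=True)


model = StandInModel()


@app.route('/predict', methods=['POST'])
def predict():
    data = request.get_json()
    batch = np.asarray(data['instances'], dtype=np.float32)
    predictions = model.predict(batch)
    # NumPy arrays are not JSON serializable, so convert to nested lists.
    return jsonify({'predictions': predictions.tolist()})


# Exercise the route in-process with Flask's test client.
client = app.test_client()
response = client.post('/predict', json={'instances': [[1, 2], [3, 4]]})
print(response.get_json())
```

Because the route only accepts POST, a GET request to /predict is answered with a 405 Method Not Allowed status.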

Step 7: Load the deep learning model

Load the deep learning model into memory before the Flask app begins processing requests. This can be done in the global scope of app.py so that the model is reachable from the endpoints. For example:

model = tf.keras.models.load_model('path/to/model.h5')

Step 8: Incorporate the deep learning model

Bring in any required preprocessing steps along with your trained deep learning model.

Use the loaded model inside your route functions to make predictions or perform additional operations. For instance, if your model needs preprocessing, the route can look like this. This version replaces the simpler predict() defined earlier, because Flask does not allow two view functions with the same name.

import numpy as np

@app.route('/predict', methods=['POST'])
def predict():
    data = request.get_json()
    input_data = preprocess(data)    # your own preprocessing function
    result = model.predict(input_data)
    output = postprocess(result)     # your own function; must return JSON-serializable data
    return jsonify(output)

Step 9: Run the Flask Application

if __name__ == '__main__':
    app.run()

Inside a route function, your Flask application can use the request object to read the data the client sent through the REST API, for example with request.get_json() for a JSON body.
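As a small illustration of the request object, the sketch below reads a JSON body, a query parameter, and the content type, and rejects bodies that are not JSON. The /inspect route and the top_k parameter are hypothetical names chosen for the example.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


@app.route('/inspect', methods=['POST'])
def inspect_request():
    # silent=True returns None instead of raising when the body is not valid JSON.
    body = request.get_json(silent=True)
    if body is None:
        return jsonify({'error': 'request body must be JSON'}), 400
    return jsonify({
        'json_body': body,
        # Query parameters come from the URL, e.g. /inspect?top_k=3
        'top_k': request.args.get('top_k', default=1, type=int),
        'content_type': request.content_type,
    })


client = app.test_client()
ok = client.post('/inspect?top_k=3', json={'x': [1, 2]})
bad = client.post('/inspect', data='not json', content_type='text/plain')
print(ok.get_json(), bad.status_code)
```

Returning a 400 status with a short error message tells the client that the problem lies in the request rather than in the server.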

One of the future trends in deep learning model deployment with REST APIs is the rising use of containerization and orchestration technologies such as Docker and Kubernetes, which simplify deployment and management across platforms. Another is edge computing, which involves deploying models on edge devices; its adoption is growing because of real-time and low-latency applications.

I hope this article helps you deploy your first model to production. Thanks for reading.