Sensor Fusion for Self-Driving Cars

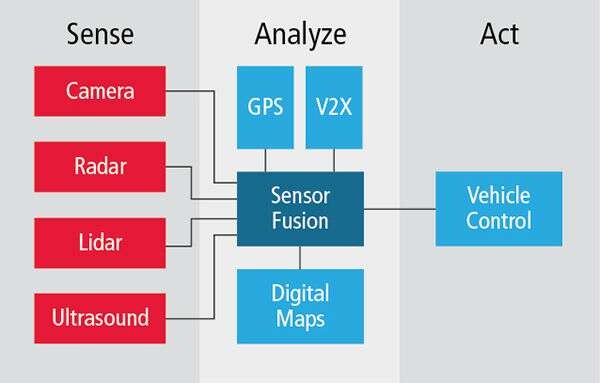

To ‘see’ the environment around them, self-driving cars rely on sensors such as cameras and radar. However, sensor data alone is insufficient. Autonomous cars also need enough computing capacity and machine intelligence to analyze multiple, often contradictory data streams and produce a single, accurate picture of their surroundings. This process, known as ‘sensor fusion’, is a prerequisite for self-driving cars, but achieving it is a significant technological challenge. Here, we look at the various sensor technologies, why sensor fusion is important, and how edge AI technology supports it all.

SENSORS IN AUTONOMOUS VEHICLES

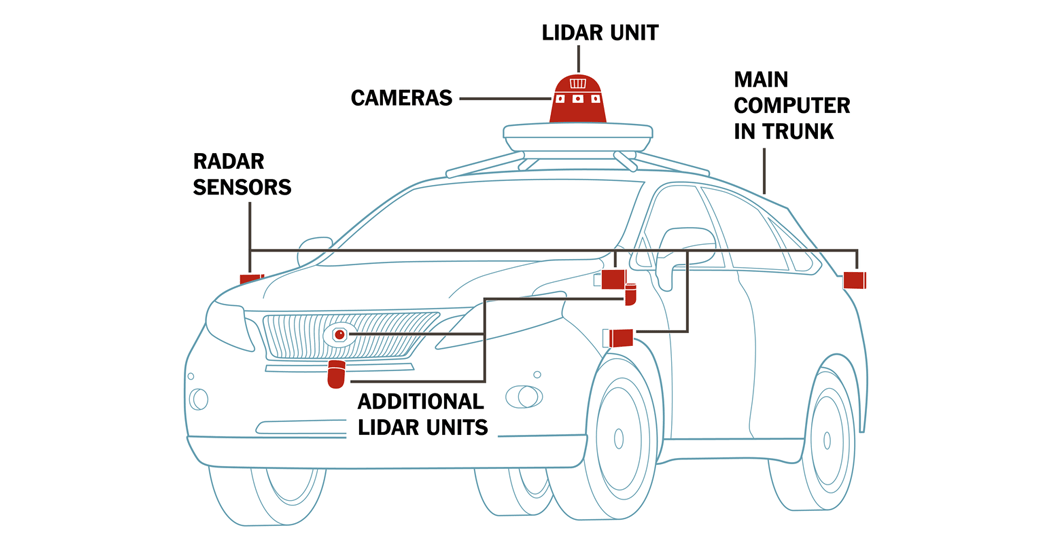

Sensors are key components of autonomous cars. Cameras, lidar, radar, sonar, a global positioning system (GPS) receiver, an inertial measurement unit (IMU), and wheel odometry are examples of sensors used in autonomous vehicles. These sensors collect data that the vehicle's computer processes and uses to control steering, braking, and speed. In addition to the onboard sensors, information from cloud-based environmental maps and data from other vehicles are used to make driving decisions.

SENSOR FUSION

Sensor fusion is a method of integrating data from multiple sources to produce a single, coherent piece of information. When several sources are combined, the resulting information is more reliable than what any one source provides alone. This is especially true when different types of data are combined. For example, an autonomous vehicle needs a camera to mimic human vision, while obstacle distance is better measured by sensors such as lidar or radar. Fusing camera data with lidar or radar data is therefore critical, because the two are complementary. Combining lidar and radar data, on the other hand, gives more precise information about the distance to obstacles ahead of the vehicle and to other objects in the surroundings.
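To make the "more reliable than alone" claim concrete, here is a minimal sketch of one common way to combine independent measurements of the same quantity: an inverse-variance weighted average. The function name `fuse_measurements` and the example distances and variances are assumptions chosen for illustration, not values from a real sensor.

```python
def fuse_measurements(values, variances):
    """Combine independent measurements of one quantity.

    Each measurement is weighted by the inverse of its variance, so the
    more trustworthy sensor has more influence on the fused result.
    Returns (fused_value, fused_variance).
    """
    if not values or len(values) != len(variances):
        raise ValueError("values and variances must be non-empty and equal in length")
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)
    fused_value = sum(w * x for w, x in zip(weights, values)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical example: a camera-based distance estimate (noisy) and a
# lidar range reading (precise) for the same obstacle, in metres.
camera_distance, camera_variance = 25.0, 4.0
lidar_distance, lidar_variance = 24.2, 0.04

distance, variance = fuse_measurements(
    [camera_distance, lidar_distance],
    [camera_variance, lidar_variance],
)
print(f"fused distance: {distance:.2f} m, variance: {variance:.4f}")
```

Under these assumptions the fused variance is always smaller than the variance of the best individual sensor, which is the sense in which the combination is more reliable.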

Sensor Fusion for 3D Object Detection

Lidar is increasingly used in the development of autonomous cars. Sensor fusion using camera and lidar data offers a good trade-off: hardware complexity stays low because only two types of sensors are used, and coverage is good because the camera (for vision) and the lidar (for obstacle detection) complement each other. The image data is combined with the 3D point cloud data, and the output is a 3D bounding box hypothesis together with its confidence. The PointFusion network is one notable solution to this problem.
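A common first step when combining image data with a point cloud is to work out which pixel each lidar point falls on. The sketch below does this with a simple pinhole camera model. It is not the PointFusion method itself. It assumes the points have already been transformed into the camera frame (x right, y down, z forward), and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values.

```python
def project_points(points_cam, fx, fy, cx, cy, width, height):
    """Project 3D points (camera frame, metres) onto the image plane.

    Returns a list of (u, v, depth) for points that lie in front of the
    camera and inside the image bounds.
    """
    pixels = []
    for x, y, z in points_cam:
        if z <= 0.0:
            continue  # point is behind the camera, cannot be seen
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v, z))
    return pixels


# Hypothetical intrinsics for a 1280x720 camera.
points = [(0.0, 0.0, 10.0), (1.0, 0.5, 10.0), (0.0, 0.0, -5.0)]
for u, v, depth in project_points(points, 1000.0, 1000.0, 640.0, 360.0, 1280, 720):
    print(f"pixel ({u:.1f}, {v:.1f}) at depth {depth:.1f} m")
```

Once each point has a pixel location, the depth from the lidar can be attached to the colour and texture seen by the camera at that pixel.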

Sensor Fusion for Occupancy Grid Mapping

Sensor fusion is also used for navigation and localization of autonomous cars in dynamic settings, with the camera providing high-level 2D information such as color, intensity, density, and edge information, and the lidar providing 3D point cloud data. In occupancy grid mapping, the standard method is to filter each grid cell separately. A more recent trend, however, is to apply super-pixels to the grid map, in which grid cells occupied by obstacles are not omitted.
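The standard per-cell filtering mentioned above is often implemented as a log-odds update, where every cell keeps its own occupancy belief and is updated independently. The following is a minimal sketch of that idea. The sensor model probabilities `P_HIT` and `P_MISS` are assumed values, and the helper names are illustrative.

```python
import math

P_HIT = 0.7   # assumed P(occupied | sensor reports an obstacle in the cell)
P_MISS = 0.3  # assumed P(occupied | sensor reports the cell as free)


def logit(p):
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


def probability(log_odds):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


def update_grid(grid, observations):
    """Update each observed cell independently.

    grid: 2D list of log-odds values (0.0 means unknown, i.e. p = 0.5).
    observations: iterable of (row, col, hit), where hit is True if the
    sensor saw an obstacle in that cell and False if it saw free space.
    """
    for row, col, hit in observations:
        grid[row][col] += logit(P_HIT if hit else P_MISS)


grid = [[0.0] * 3 for _ in range(2)]
# Two obstacle returns in cell (0, 1), one free-space reading in cell (1, 2).
update_grid(grid, [(0, 1, True), (0, 1, True), (1, 2, False)])
for row in grid:
    print([round(probability(cell), 3) for cell in row])
```

Working in log-odds turns the Bayesian update into a simple addition, and repeated consistent readings push a cell's probability steadily toward 0 or 1.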

Sensor Fusion for Detection and Tracking of Moving Objects

One approach in this field detects objects using radar and lidar, passes regions of interest from the lidar point clouds to a camera-based classifier, and then fuses all of the data. The fusion module feeds the tracking module, which builds a list of moving objects. Incorporating object classification from multiple sensor detections improves the perceived model of the environment.
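A tracking module has to decide which new detection belongs to which existing track before it can update its list of moving objects. The sketch below shows one simple way to do that, greedy nearest-neighbour association with a distance gate. This is an illustrative technique, not necessarily the one used in the method described above, and the `gate` value and 2D positions are assumptions.

```python
import math


def associate(tracks, detections, gate=2.0):
    """Greedily match detections to tracks by Euclidean distance.

    tracks: dict mapping track id -> (x, y) last known position in metres.
    detections: list of (x, y) fused detection positions in metres.
    gate: maximum distance for a valid match (assumed value).
    Returns (matches, unmatched), where matches maps track id -> detection
    index and unmatched lists detection indices that may start new tracks.
    """
    candidates = []
    for track_id, (tx, ty) in tracks.items():
        for index, (dx, dy) in enumerate(detections):
            distance = math.hypot(tx - dx, ty - dy)
            if distance <= gate:
                candidates.append((distance, track_id, index))
    candidates.sort(key=lambda item: item[0])  # closest pairs first

    matches = {}
    used = set()
    for _, track_id, index in candidates:
        if track_id in matches or index in used:
            continue  # track or detection already assigned
        matches[track_id] = index
        used.add(index)
    unmatched = [i for i in range(len(detections)) if i not in used]
    return matches, unmatched


tracks = {1: (0.0, 0.0), 2: (10.0, 0.0)}
detections = [(10.5, 0.0), (0.2, 0.1), (30.0, 30.0)]
matches, unmatched = associate(tracks, detections)
print("matches:", matches, "new objects:", unmatched)
```

Production trackers typically use more robust assignment methods and motion models, but the gate-and-match structure is the same.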

Data Filtering and Calibration for Sensor Fusion

Because of each sensor's limitations, no single sensor can provide reliable and precise results on its own. Once a multi-sensor suite is in place, how does a self-driving car use all of this data? The merging is frequently performed with a Kalman filter. Kalman filters rely on probability and a repeating cycle of prediction and "measurement update" to build a probabilistic understanding of the environment.

Consider this scenario: the radar indicates that the vehicle ahead is traveling at 20 mph, while the lidar indicates that it is traveling at 23 mph. The system uses the current readings, together with past measurements, to estimate the speed of the car ahead.

Filters are introduced to fuse the sensor data together and produce a more precise and reliable result.
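The radar and lidar speed readings from the scenario above can be run through a minimal one-dimensional Kalman filter. This is a sketch under stated assumptions: the previous estimate, the process noise, and the sensor noise variances are made-up values, and the speed of the car ahead is modelled as roughly constant between readings.

```python
def predict(estimate, variance, process_noise):
    """Prediction step: assume constant speed, so only uncertainty grows."""
    return estimate, variance + process_noise


def update(estimate, variance, measurement, measurement_variance):
    """Measurement update: blend the prediction with a new reading."""
    gain = variance / (variance + measurement_variance)  # Kalman gain
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


# Assumed belief carried over from past measurements (mph, mph^2).
speed, speed_variance = 21.0, 4.0

# Assumed noise levels: here the radar is trusted more for speed than the lidar.
PROCESS_NOISE = 0.5
RADAR_VARIANCE = 1.0
LIDAR_VARIANCE = 4.0

speed, speed_variance = predict(speed, speed_variance, PROCESS_NOISE)
speed, speed_variance = update(speed, speed_variance, 20.0, RADAR_VARIANCE)  # radar: 20 mph
speed, speed_variance = update(speed, speed_variance, 23.0, LIDAR_VARIANCE)  # lidar: 23 mph

print(f"estimated speed: {speed:.2f} mph, variance: {speed_variance:.3f}")
```

With these assumed values the estimate lands between the two readings, closer to the sensor with the lower variance, and the resulting uncertainty is smaller than that of either sensor alone.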

CONCLUSION

The dependability of the sensors and of the sensor fusion system plays a critical part in autonomous driving. Not only must the cars have cameras that mimic human vision, but they must also have sensors such as radar and lidar to detect obstacles and map the surroundings, and all of these must work together for the car to be fully autonomous.

Thank you for reading this article. I hope that gave you a quick overview of self-driving car sensor fusion techniques. Feel free to leave a comment below if you have any questions or suggestions about the post. Your feedback is always valuable to us.