Hardware and Software Architecture of Self-Driving Cars

Engineers continue to adopt more sophisticated sensors and computing technology to improve the hardware and software of self-driving cars. Conventional designs for autonomous vehicles have so far relied on video cameras, radar, ultrasonic sensors, and lidar. Subsystems no longer work in isolation from one another: an autonomous vehicle must complete a long list of tasks in a variety of situations, with the output of one task serving as input for the next. The best results come when hardware and software work together, each contributing its strengths. This article discusses the most common hardware and software architectures of self-driving cars.

1. Hardware architecture

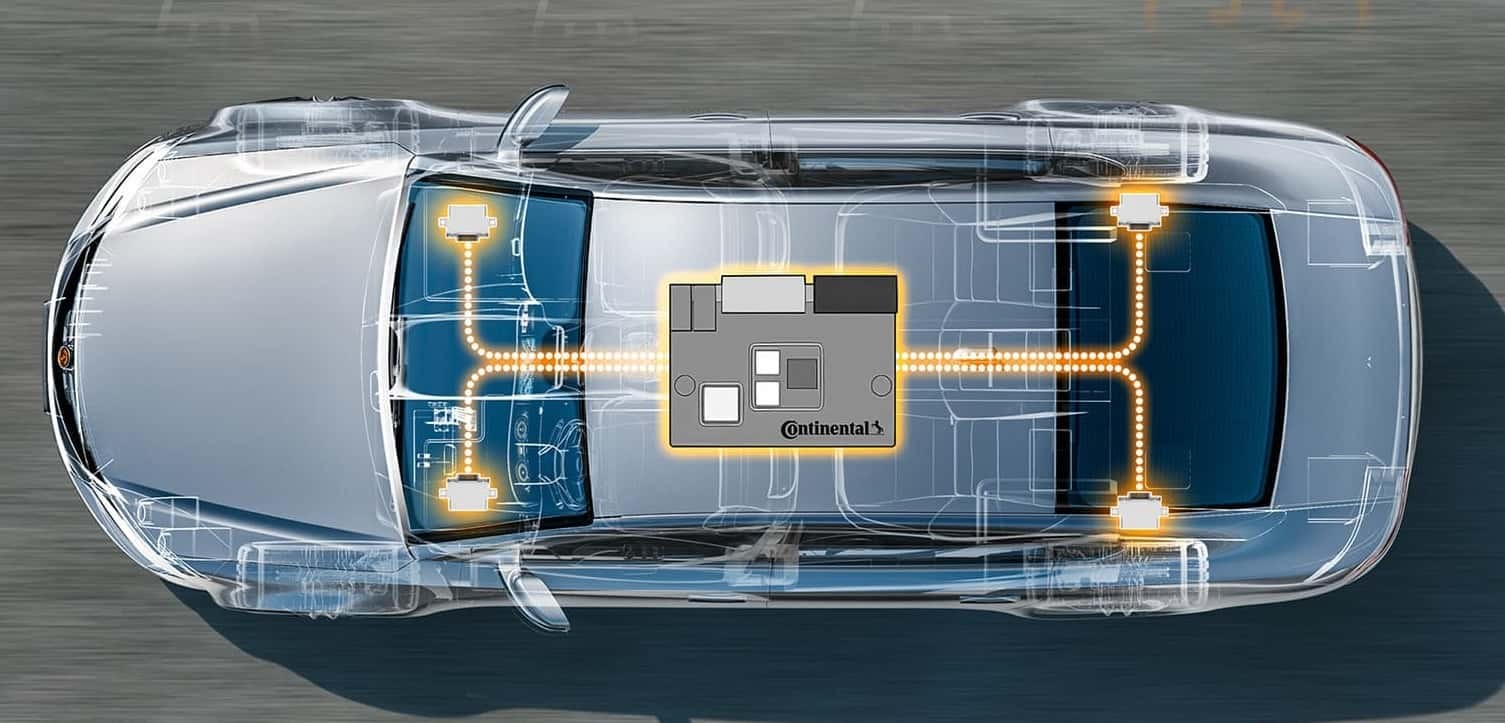

Hardware components play a vital role in the redundancy and safety of self-driving systems, and self-driving tasks are performed by a combination of these components. The goal of a driving assistance system is to improve the safety of the vehicle's occupants and of the surrounding environment while also enhancing the driver's comfort.

Environment sensor configuration

For safety, self-driving cars must be equipped with redundant sensors; they cannot rely on a single type of sensor. Cameras, radar, lidar, and GPS are the most commonly used sensors. A common sensor setup consists of a 16-line lidar (laser radar), single-line lidars, millimeter-wave radars, cameras, and a GPS unit.

Vehicle-mounted computing platform

The computing platform for self-driving cars is made up of two industrial personal computers (IPCs). Each IPC must operate for extended periods under extreme temperature and vibration in order to meet automotive-grade standards. One IPC serves as the primary controller and the other as a hot standby. Both run online in real time. Under normal conditions, the output command of the primary control IPC takes precedence. When the primary control IPC fails, the system immediately switches to the output command of the standby IPC to guarantee safety.
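The primary/standby arrangement can be illustrated with a small command arbiter. This is only a sketch under stated assumptions: the `Command` and `IpcStatus` types, the heartbeat-based health check, and the 0.1 s timeout are hypothetical choices for illustration, not details given by any particular platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    steering_deg: float  # requested steering wheel angle
    throttle: float      # 0.0 .. 1.0
    source: str          # name of the IPC that produced the command


@dataclass
class IpcStatus:
    name: str
    last_heartbeat_s: float     # time of the most recent heartbeat
    command: Optional[Command]  # latest output command, if any


def is_alive(ipc: IpcStatus, now_s: float, timeout_s: float = 0.1) -> bool:
    """Assumed health rule: a recent heartbeat and an available command."""
    return ipc.command is not None and (now_s - ipc.last_heartbeat_s) <= timeout_s


def select_command(primary: IpcStatus, standby: IpcStatus, now_s: float) -> Optional[Command]:
    """Prefer the primary IPC; switch to the hot standby when the primary fails."""
    if is_alive(primary, now_s):
        return primary.command
    if is_alive(standby, now_s):
        return standby.command
    return None  # both failed: the caller should bring the vehicle to a safe stop
```

Because both IPCs compute commands continuously, switching is just a matter of choosing which output to forward, which is what makes the standby "hot".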

Sensors and actuators

Sensors and actuators are two of the most important components of self-driving cars; they are what make it possible to drive without human intervention. Dozens of sensors are required to collect data from the environment. Commands derived from the sensor data then drive the actuators, which carry out the final action on the vehicle.

2. Software architecture

Environmental perception, scene recognition, decision making, human-machine interaction, and public service support together make up the intelligent vehicle's software architecture. The most crucial aspect of the software in self-driving cars is the interaction between these modules.

Environmental perception module

The most important perception tasks for autonomous driving are road boundary detection, obstacle detection, pedestrian and vehicle identification, traffic sign detection, lane detection, traffic light detection, and vehicle body state estimation. Road edge detection mostly relies on edge detection methods and the road surface texture template algorithm. The support vector machine method is commonly used for obstacle identification.

Convolutional neural networks are mostly used for classification and identification in pedestrian and vehicle detection. Traffic signs are identified with a fast convolutional neural network technique. Lane detection mostly uses the Hough line detection method. Traffic light detection also relies primarily on a fast convolutional neural network technique for classification and detection.
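To make the Hough line detection idea concrete, here is a minimal pure-Python sketch of the voting step. It assumes the input is a list of edge pixel coordinates (for example, from an edge detector); the function names, the 1-degree angle step, and the 1-pixel distance resolution are illustrative choices. Production systems would more likely use an optimized library routine such as OpenCV's `cv2.HoughLines`.

```python
import math
from collections import Counter


def hough_lines(points, theta_steps=180, rho_res=1.0):
    """Let every edge pixel vote for all (rho, theta) lines passing through it."""
    votes = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            # Normal form of a line: rho = x*cos(theta) + y*sin(theta)
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(rho / rho_res), t)] += 1
    return votes


def strongest_line(points, theta_steps=180, rho_res=1.0):
    """Return (rho, theta_degrees, vote_count) of the most-voted line."""
    votes = hough_lines(points, theta_steps, rho_res)
    (rho_bin, t), count = votes.most_common(1)[0]
    return rho_bin * rho_res, math.degrees(math.pi * t / theta_steps), count


# A vertical lane marking at x = 5, 100 pixels tall.
lane_pixels = [(5, y) for y in range(100)]
rho, theta_deg, count = strongest_line(lane_pixels)
```

For the vertical marking above, all 100 pixels agree on the line whose normal points along the x-axis (angle 0) at distance 5 from the origin, so that cell collects the most votes.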

Scene recognition

Scene recognition primarily consists of driving scenario fusion, prediction of surrounding behavior, and global route planning. The primary goal of driving scenario fusion is to analyze data from numerous heterogeneous sensors, obtain more precise knowledge of targets in the environment, and assess the type of road scene. The primary goal of surrounding behavior prediction is to forecast the future trajectory and speed of the vehicles driving around the intelligent vehicle. The primary goal of global route planning is to select and plan a path from the starting point to the destination.
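Global route planning can be illustrated as a shortest-path search over a road graph. The sketch below uses Dijkstra's algorithm, which is one common choice and not necessarily what any given vehicle uses; the `road_network` graph, its node names, and its edge lengths are made up for the example.

```python
import heapq


def shortest_route(graph, start, goal):
    """Return (total_cost, [nodes]) of the cheapest path, or (inf, []) if none exists."""
    queue = [(0.0, start, [start])]  # (cost so far, current node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + length, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network: intersections as nodes, road lengths in km as weights.
road_network = {
    "A": {"B": 2.0, "C": 5.0},
    "B": {"C": 1.0, "D": 4.0},
    "C": {"D": 1.0},
    "D": {},
}

cost, route = shortest_route(road_network, "A", "D")
# cost == 4.0, route == ["A", "B", "C", "D"]
```

In practice the edge weights might encode travel time or traffic conditions rather than distance, but the search itself stays the same.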

Decision making

Decision making covers driving task selection, local route planning, and lateral motion control. Driving task selection chooses the intelligent vehicle's driving behavior, such as driving straight, changing lanes, overtaking, turning left or right at an intersection, and starting and stopping at intersections. The primary goal of local route planning is to create a viable path for each chosen driving behavior; this path is generally between 10 and 50 meters long. The primary function of lateral motion control is to track the planned path laterally and output the steering wheel angle.
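As a rough illustration of lateral motion control, the sketch below computes a steering wheel angle with the pure pursuit method. Pure pursuit is a swapped-in example technique, not one named in this article, and the wheelbase and steering ratio defaults are assumed values.

```python
import math


def pure_pursuit_steering(x, y, yaw, target_x, target_y,
                          wheelbase_m=2.7, steering_ratio=16.0):
    """Return the steering wheel angle (rad) that steers toward a look-ahead point.

    (x, y, yaw) is the rear-axle pose, with yaw in radians and
    counter-clockwise positive. A positive result means steer left.
    """
    dx, dy = target_x - x, target_y - y
    lookahead = math.hypot(dx, dy)
    if lookahead < 1e-6:
        return 0.0  # already at the target point
    alpha = math.atan2(dy, dx) - yaw  # heading error toward the target
    # Pure pursuit law: delta = atan(2 * L * sin(alpha) / lookahead)
    front_wheel_angle = math.atan2(2.0 * wheelbase_m * math.sin(alpha), lookahead)
    # Assumed fixed ratio between steering wheel and front wheel angles.
    return front_wheel_angle * steering_ratio


straight = pure_pursuit_steering(0.0, 0.0, 0.0, 10.0, 0.0)  # target dead ahead
left = pure_pursuit_steering(0.0, 0.0, 0.0, 10.0, 2.0)      # target to the left
```

The look-ahead point would typically be taken from the local path, a few meters ahead of the vehicle; a longer look-ahead gives smoother but less precise tracking.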

Human-machine interaction

This module covers programmer debugging, the driver interface, and remote control intervention. One of its most essential uses in self-driving cars is emergency response. The driving scenarios of self-driving cars include lane keeping, lane changing, overtaking, and emergency collision avoidance. The intelligent vehicle's self-driving capability in each mode is judged based on its own hardware and software conditions.

Public service support

Virtual switching, process monitoring, and logging services are all part of public service support. Logically, the virtual switch is made up of several virtual buses, and several software modules are attached to each bus. Modules on the same bus communicate through a publish/subscribe mechanism. The virtual switch takes over the communication function from the modules, allowing each module to focus on its own processing. The logging service records the messages on the virtual bus to a database in time order. The messages can also be replayed, in the same time order, to the application layer module that needs debugging, restoring the driving scenario observed during the test. Process monitoring collects and analyzes heartbeat data. When exceptions are discovered, the system quickly applies countermeasures and alerts the debugging team to inspect the system.
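The virtual bus, its log, and replay can be sketched in a few lines. This is a simplified in-process illustration: the `VirtualBus` class and its method names are hypothetical, and an in-memory list stands in for the database.

```python
from collections import defaultdict


class VirtualBus:
    """In-process publish/subscribe bus that logs every message."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks
        self.log = []                          # (timestamp_s, topic, payload)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, timestamp_s, topic, payload, record=True):
        if record:
            self.log.append((timestamp_s, topic, payload))
        for callback in self._subscribers[topic]:
            callback(timestamp_s, payload)

    def replay(self, recorded_log):
        """Re-deliver recorded messages in timestamp order without re-logging them."""
        for timestamp_s, topic, payload in sorted(recorded_log, key=lambda m: m[0]):
            self.publish(timestamp_s, topic, payload, record=False)


# During a road test, modules publish onto the bus and the log fills up.
bus = VirtualBus()
bus.publish(0.2, "obstacles", {"count": 1})
bus.publish(0.1, "obstacles", {"count": 0})

# Later, the log is replayed to the module under debugging.
received = []
debug_bus = VirtualBus()
debug_bus.subscribe("obstacles", lambda t, msg: received.append((t, msg["count"])))
debug_bus.replay(bus.log)
# received == [(0.1, 0), (0.2, 1)]
```

Because modules only talk to the bus, the module under debugging cannot tell replayed messages from live ones, which is what makes scenario restoration possible.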

Conclusion

Achieving the goals of a self-driving system requires a good combination of software and hardware. Both are updated continuously: software is becoming more accurate and precise, and hardware components are producing more accurate data.

Thank you for reading the article. We hope you now have a clear understanding of the hardware and software architecture of self-driving cars. If you have any questions or suggestions about the article, feel free to comment below. Your comments are always important to us.