Exploring Deep Dream and Neural Style Transfer | Generative AI

Introduction

Deep Dream and Neural Style Transfer are two AI techniques that manipulate images for creative effect. Deep Dream uses a neural network to amplify the patterns it detects, producing unique, dreamlike images. Neural Style Transfer, on the other hand, applies artistic styles to existing images, making it possible to recreate artworks or unique visual styles.

Importance of Deep Dream and Neural Style Transfer

Deep Dream and Neural Style Transfer are significant steps forward in generative AI. They give artists, scientists, and technologists powerful new tools for creating images. Deep Dream reveals the patterns a neural network has learned, while Neural Style Transfer applies artistic styles to images, opening up new avenues for creative work. These methods can be used in art, education, scientific visualization, and business, but they also raise ethical questions.

Let's dive into Deep Dream and Neural Style Transfer

DeepDream is a generative AI method created by engineers at Google. By enhancing and amplifying the patterns that a neural network detects, it transforms ordinary images into dreamlike, surreal scenes. The technique offers a glimpse into how a network perceives the world, and it has inspired artists to push the limits of machine-generated art.

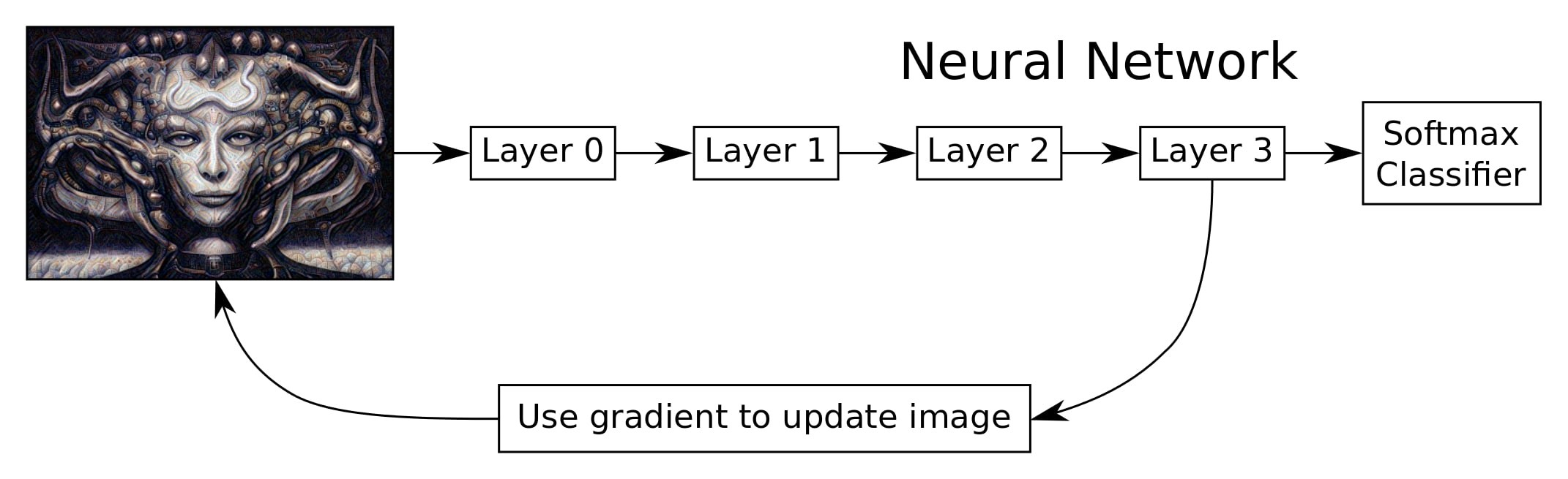

Flowchart of Deepdream:

DeepDream utilizes TensorFlow and the Inception model to enhance patterns in images. By iteratively updating the image based on gradients derived from the network, DeepDream amplifies these patterns until the desired results are achieved. Techniques like blurring gradients and tiling enable the algorithm to handle high-resolution images efficiently.
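The update loop described above is ordinary gradient ascent applied to the image itself. The following is a minimal NumPy sketch of that idea, assuming a toy objective (how well the image correlates with a fixed `pattern`) in place of a real network activation. The names `pattern`, `score`, `score_gradient`, and `ascend` are hypothetical and exist only for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
pattern = rng.normal(size=(8, 8))      # stand-in for "what a channel responds to"

def score(image):
    # Toy objective: how strongly the image matches the pattern.
    return float(np.mean(image * pattern))

def score_gradient(image):
    # Analytic gradient of the toy objective with respect to the image.
    return pattern / pattern.size

def ascend(image, iterations=20, step=1.0):
    image = image.copy()
    for _ in range(iterations):
        g = score_gradient(image)
        g = g / (g.std() + 1e-8)       # normalize so one step size suits any objective
        image += g * step              # move the image uphill
    return image

start = rng.uniform(size=(8, 8))
dreamed = ascend(start)
print(score(start), score(dreamed))    # the second score is larger
```

In the real algorithm the gradient comes from backpropagation through the network rather than from a closed-form expression, but the loop has the same shape.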

Implementation of Deep Dream

Let's walk through a simple implementation to see how this works:

Step 1: Importing the Necessary Libraries

```python
import os
from io import BytesIO
from functools import partial

import numpy as np
import PIL.Image
from IPython.display import clear_output, Image, display, HTML
import tensorflow.compat.v1 as tf
import matplotlib.pyplot as plt
import ipywidgets as widgets
```

Step 2: Unzipping the Model File

```shell
!wget https://storage.googleapis.com/download.tensorflow.org/models/inception5h.zip && unzip inception5h.zip
```

TensorFlow Inception Model Initialization

The code section sets up the Inception model in TensorFlow, parses the model file, creates a graph definition, defines input data placeholders, and imports the graph definition.

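```python
model = 'tensorflow_inception_graph.pb'
graph = tf.Graph()
tf_session = tf.compat.v1.InteractiveSession(graph=graph)

# Parse the serialized graph definition from the model file.
with tf.io.gfile.GFile(model, 'rb') as f:
    graph_definition = tf.compat.v1.GraphDef()
    graph_definition.ParseFromString(f.read())

# Placeholder for the input image.
tensor_input = tf.compat.v1.placeholder(np.float32, name='input')
# Value subtracted from every pixel before the image enters the network
# (the reference TensorFlow DeepDream notebook uses 117.0 here).
imagenet_mean = 200.0
tensor_preprocessed = tf.expand_dims(tensor_input - imagenet_mean, 0)
tf.compat.v1.import_graph_def(graph_definition, {'input': tensor_preprocessed})
```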

Extracting Layer and Feature Channel Information from TensorFlow Inception Model

The code iterates through TensorFlow graph operations, identifying Conv2D operations with 'import/' names, collecting layers and feature channels, and calculating the total number of layers and feature channels.

```python
layers = []
feature_numbers = []

for op in graph.get_operations():
    if op.type == 'Conv2D' and 'import/' in op.name:
        layers.append(op.name)
        feature_numbers.append(int(graph.get_tensor_by_name(op.name + ':0').shape[-1]))

print('Total number of layers:', str(len(layers)) + "\n")
print('Total number of feature channels:', sum(feature_numbers))
```

Extracting Layer Name from Graph Operation

This code section extracts the layer name from a graph operation and processes it to obtain a cleaned layer name.

```python
layer = layers[46]
print(layer + "\n")

# Drop the leading scope and the trailing operation name.
layer = "/".join(layer.split("/")[1:-1])
print(layer)
```
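The string handling in the cleaning step can be tried without loading the model. This is a small sketch using a hypothetical operation name of the form `scope/layer/op`. The exact names in the Inception graph are an assumption here, and `clean_layer_name` is an illustrative helper rather than part of the tutorial's code.

```python
def clean_layer_name(op_name):
    # Drop the first path component (the import scope) and the last (the op itself).
    return "/".join(op_name.split("/")[1:-1])

# Hypothetical operation name, used only to illustrate the string handling.
example = "import/mixed4d_3x3_bottleneck_pre_relu/conv"
print(clean_layer_name(example))   # mixed4d_3x3_bottleneck_pre_relu
```

A name with only two components would reduce to an empty string, so the approach relies on every convolution living one level below its layer scope.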

Extracting Tensor from a Layer in the Inception Model

This code section retrieves a specific tensor (layer) from the pre-trained Inception model.

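```python
# Helper assumed by the rest of this walkthrough (its definition is not shown in
# the original): look up a layer's output tensor by name in the imported graph.
def Tensor(layer_name):
    return graph.get_tensor_by_name('import/%s:0' % layer_name)

Tensor(layer)
```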

Initialization of Layer Name, Channel, and Noisy Image

The provided variables specify a target layer and channel within a neural network for the DeepDream algorithm, applied to a randomly generated noisy image.

```python
layer_name = 'mixed4d_3x3_bottleneck_pre_relu'
channel = 139
noisy_image = np.random.uniform(size=(224, 224, 3)) + 100.0
```

Step 3: Naive DeepDream Image Generation

Image Visualization and Normalization Functions

This code section includes two functions for displaying images and normalizing image ranges for visualization purposes.

```python
def show_image(image_array, fmt='jpeg'):
    # Clip to [0, 1], convert to 8-bit, and display inline.
    image_array = np.clip(image_array, 0, 1)
    image_array = (image_array * 255).astype(np.uint8)
    img = PIL.Image.fromarray(image_array)
    f = BytesIO()
    img.save(f, format=fmt)
    display(Image(data=f.getvalue()))

def visual_normalization(image_array, scaling_factor=0.1):
    # Center the values on 0.5 and scale the spread for display.
    image_array_mean = np.mean(image_array)
    image_array_std = np.std(image_array)
    max_std = max(image_array_std, 1e-4)
    normalized = (image_array - image_array_mean) / max_std * scaling_factor + 0.5
    return normalized
```
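To see why the normalization step is needed before display, it helps to run the same arithmetic on an array shaped like the noisy starting image. The sketch below repeats the formula so that it runs on its own. The random seed and the printed statistics are illustrative choices, not part of the tutorial.

```python
import numpy as np

def visual_normalization(image_array, scaling_factor=0.1):
    # Same arithmetic as the function above, repeated so this snippet runs alone.
    std = max(np.std(image_array), 1e-4)
    return (image_array - np.mean(image_array)) / std * scaling_factor + 0.5

rng = np.random.default_rng(0)
raw = rng.uniform(size=(224, 224, 3)) + 100.0   # values near 100, far outside [0, 1]
normalized = visual_normalization(raw)

print(float(normalized.mean()), float(normalized.std()))
print(float(normalized.min()), float(normalized.max()))
```

The mean lands at 0.5 and the standard deviation at the scaling factor, so nearly all values fall inside the [0, 1] range that show_image clips to.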

Render Naive DeepDream Image

This code section defines a function render_naive that generates a DeepDream image using a naive optimization approach.

```python
def render_naive(target_tensor, input_image=noisy_image, iter_n=20, step=1.0):
    t_score = tf.reduce_mean(target_tensor)              # objective to maximize
    t_grad = tf.gradients(t_score, tensor_input)[0]      # gradient w.r.t. the image

    input_image = input_image.copy()
    show_image(visual_normalization(input_image))
    for i in range(iter_n):
        gradients, score = tf_session.run([t_grad, t_score], {tensor_input: input_image})
        gradients /= gradients.std() + 1e-8              # normalize the gradient
        input_image += gradients * step                  # gradient ascent step
    clear_output()
    show_image(visual_normalization(input_image))

render_naive(Tensor(layer_name)[:, :, :, channel])
```

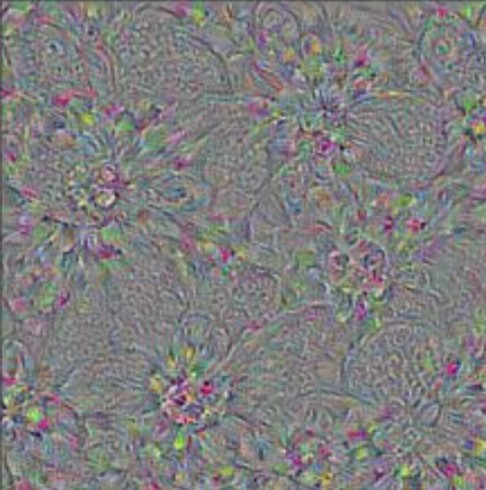

Output:

Step 4: Multi-scale DeepDream Image Generation

TensorFlow Function Wrapper with Session Execution

The code section introduces tensorflow_function, a function wrapper that handles graph creation, placeholder creation, and session initialization automatically, which streamlines the creation and execution of TensorFlow functions.

```python
def tensorflow_function(*argtypes):
    def wrap(f):
        # Build f in its own graph, with one placeholder per argument type.
        graph = tf.Graph()
        with graph.as_default():
            placeholders = [tf.placeholder(argtype) for argtype in argtypes]
            outputs = f(*placeholders)
            session = tf.Session()
            session.run(tf.global_variables_initializer())

        def wrapper(*args, **kwargs):
            feed_dict = {placeholder: arg for placeholder, arg in zip(placeholders, args)}
            return session.run(outputs, feed_dict=feed_dict)

        return wrapper
    return wrap
```

Image Resize Function using TensorFlow

The code section outlines a function called resize that uses TensorFlow to resize an input image tensor, expanding it, and adjusting its shape.

```python
def resize(image, size):
    image = tf.expand_dims(image, 0)
    image.set_shape([1, None, None, None])
    return tf.image.resize(image, size, method=tf.image.ResizeMethod.BILINEAR)[0, :, :, :]

resize = tensorflow_function(np.float32, np.int32)(resize)
```

Tiled Gradient Calculation for Image with Target Gradient

The code calculates the gradient of an image using a tiled approach, dividing the image into tiles, computing each tile's gradient using TensorFlow, and combining them.

```python
def calculate_gradient_tiled(image, target_gradient, tile_size=512):
    sz = tile_size
    h, w = image.shape[:2]
    # Shift the image by a random offset so tile seams move between calls.
    sx, sy = np.random.randint(sz, size=2)
    img_shift = np.roll(np.roll(image, sx, axis=1), sy, axis=0)
    gradient = np.zeros_like(image)
    for y in range(0, max(h - sz // 2, sz), sz):
        for x in range(0, max(w - sz // 2, sz), sz):
            sub = img_shift[y:y + sz, x:x + sz]
            g = tf_session.run(target_gradient, {tensor_input: sub})
            # Normalize each tile's gradient by its root-mean-square value.
            norm_factor = np.sqrt(np.mean(np.square(g))) + 1e-8
            g /= norm_factor
            gradient[y:y + sz, x:x + sz] = g
    # Undo the random shift.
    gradient = np.roll(np.roll(gradient, -sx, axis=1), -sy, axis=0)
    return gradient
```
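The shift, tile, and un-shift bookkeeping above can be checked in isolation. The following is a minimal NumPy sketch, assuming a pointwise function `fn` in place of the TensorFlow gradient call and omitting the per-tile normalization. The names `tiled_apply`, `fn`, and `seed` are hypothetical and not part of the tutorial's code.

```python
import numpy as np

def tiled_apply(image, fn, tile_size=4, seed=0):
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    # Random shift, as in the tiled gradient function above.
    sx, sy = rng.integers(tile_size, size=2)
    shifted = np.roll(np.roll(image, sx, axis=1), sy, axis=0)
    result = np.zeros_like(shifted)
    for y in range(0, max(h - tile_size // 2, tile_size), tile_size):
        for x in range(0, max(w - tile_size // 2, tile_size), tile_size):
            tile = shifted[y:y + tile_size, x:x + tile_size]
            result[y:y + tile_size, x:x + tile_size] = fn(tile)
    # Undo the shift so the output lines up with the input.
    return np.roll(np.roll(result, -sx, axis=1), -sy, axis=0)

image = np.arange(64, dtype=np.float32).reshape(8, 8)
doubled = tiled_apply(image, lambda tile: 2.0 * tile)
print(np.allclose(doubled, 2.0 * image))   # True
```

Because the stand-in function is pointwise, the tiled result matches applying it to the whole image at once, which confirms that the two pairs of np.roll calls cancel. With a real network the random shift matters because it moves the tile seams to a different place on every iteration, so they do not build up into visible lines.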

Multi-scale DeepDream Rendering

The render_multiscale function enhances DeepDream rendering by applying gradient ascent to a specified target layer and channel, scaling the image in multiple octaves.

```python
def render_multiscale(target_tensor, input_image=noisy_image, iter_n=10, step=1.0,
                      octave_n=3, octave_scale=1.4):
    t_score = tf.reduce_mean(target_tensor)
    t_grad = tf.gradients(t_score, tensor_input)[0]

    input_image = input_image.copy()
    for octave in range(octave_n):
        if octave > 0:
            # Upscale the image before each octave after the first.
            hw = np.float32(input_image.shape[:2]) * octave_scale
            input_image = resize(input_image, np.int32(hw))
        for i in range(iter_n):
            gradients = calculate_gradient_tiled(input_image, t_grad)
            gradients /= (np.std(gradients) + 1e-8)
            input_image += gradients * step
            print('.', end=' ')
        clear_output()
        show_image(visual_normalization(input_image))

render_multiscale(Tensor(layer_name)[:, :, :, channel])
```

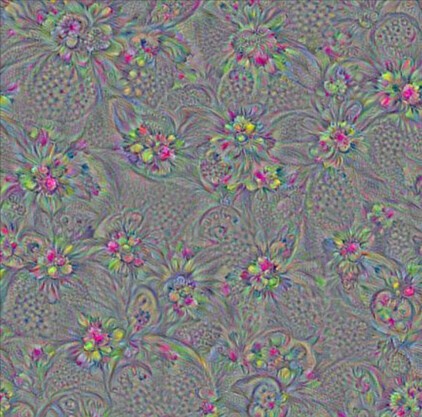

Output:
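The image sizes that the multi-scale loop works through follow directly from octave_n and octave_scale. This is a small sketch of that arithmetic in plain Python, assuming the 224 by 224 starting image used above. `octave_sizes` is a hypothetical helper that exists only for illustration.

```python
def octave_sizes(height, width, octave_n=3, octave_scale=1.4):
    # Mirror the loop in render_multiscale: the first octave keeps the input size,
    # each later octave multiplies the previous size and truncates to an integer.
    sizes = [(height, width)]
    for _ in range(1, octave_n):
        height, width = int(height * octave_scale), int(width * octave_scale)
        sizes.append((height, width))
    return sizes

print(octave_sizes(224, 224))   # [(224, 224), (313, 313), (438, 438)]
```

Each octave therefore adds detail at a finer scale than the one before it, while the patterns found at coarser scales are carried along by the upscaling.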

Step 5: Laplacian Pyramid Gradient Normalized Image Generation

Image Splitting into Low and High Frequency Components

The code section outlines a function called laplacian_splitting, which splits an image into low and high frequencies using a convolution operation with a specific kernel.

```python
def laplacian_splitting(image):
    # 5x5 binomial blur kernel, applied to each of the 3 color channels independently.
    kernel = np.float32([1, 4, 6, 4, 1])
    kernel = np.outer(kernel, kernel)
    kernel = kernel[:, :, None, None] / np.sum(kernel) * np.eye(3, dtype=np.float32)
    with tf.name_scope('split'):
        lo = tf.nn.conv2d(image, kernel, [1, 2, 2, 1], 'SAME')
        lo2 = tf.nn.conv2d_transpose(lo, kernel * 4, tf.shape(image), [1, 2, 2, 1])
        hi = image - lo2
    return lo, hi
```

Laplacian Pyramid Construction with N Splits

The code section defines a function called laplacian_splitting_n, which creates a Laplacian pyramid with n splits, a multi-scale representation of an image.

```python
def laplacian_splitting_n(image, n):
    levels = []
    for _ in range(n):
        image, hi = laplacian_splitting(image)
        levels.append(hi)
    levels.append(image)
    # Coarsest level first.
    return levels[::-1]
```

Laplacian Pyramid Merge

```python
def laplacian_merge(levels):
    image = levels[0]
    kernel = np.float32([1, 4, 6, 4, 1])
    kernel = np.outer(kernel, kernel)
    kernel = kernel[:, :, None, None] / np.sum(kernel) * np.eye(3, dtype=np.float32)
    for hi in levels[1:]:
        with tf.name_scope('merge'):
            # Upsample the coarser level and add back the stored detail.
            image = tf.nn.conv2d_transpose(image, kernel * 4, tf.shape(hi), [1, 2, 2, 1]) + hi
    return image
```
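The split and merge steps are designed to undo each other: each high-frequency level stores exactly what the upsampled low-frequency level is missing. The following is a simplified one-dimensional NumPy sketch of that property. It assumes np.repeat as a crude upsampler in place of the transposed convolution, and the names `blur`, `split`, `split_n`, and `merge` are hypothetical stand-ins rather than the tutorial's functions.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16.0

def blur(signal):
    return np.convolve(signal, KERNEL, mode='same')

def split(signal):
    lo = blur(signal)[::2]                      # blur, then keep every second sample
    lo_up = np.repeat(lo, 2)[:len(signal)]      # crude upsampling back to full length
    hi = signal - lo_up                         # detail lost by the low-pass step
    return lo, hi

def split_n(signal, n):
    levels = []
    for _ in range(n):
        signal, hi = split(signal)
        levels.append(hi)
    levels.append(signal)
    return levels[::-1]                         # coarsest level first

def merge(levels):
    signal = levels[0]
    for hi in levels[1:]:
        signal = np.repeat(signal, 2)[:len(hi)] + hi
    return signal

signal = np.sin(np.linspace(0, 6, 32)) + 0.1 * np.arange(32)
levels = split_n(signal, 2)
print([len(level) for level in levels])         # [8, 16, 32]
print(np.allclose(merge(levels), signal))       # True
```

Merging unmodified levels reproduces the input. The normalization technique in this step works by rescaling each level before merging, which changes the balance between coarse and fine structure.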

Image Standardization Function

The normalize_std function rescales an image tensor so that its root-mean-square value is 1.0. It divides the image by the square root of the mean squared pixel value, which equals the standard deviation when the pixel mean is zero. A small eps guards against division by zero.

```python
def normalize_std(image, eps=1e-10):
    with tf.name_scope('normalize'):
        std = tf.sqrt(tf.reduce_mean(tf.square(image)))
        return image / tf.maximum(std, eps)
```
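The quantity computed in normalize_std is a root-mean-square value, with no mean subtraction. The short NumPy sketch below shows when that matches the standard deviation and when it does not. The helper `rms` and the sample arrays are hypothetical, chosen only for illustration.

```python
import numpy as np

def rms(x):
    # What normalize_std divides by: sqrt(mean(x^2)), with no mean subtraction.
    return float(np.sqrt(np.mean(np.square(x))))

rng = np.random.default_rng(0)
zero_mean = rng.normal(size=10_000)
zero_mean -= zero_mean.mean()          # remove the mean
shifted = zero_mean + 3.0              # same spread, non-zero mean

print(rms(zero_mean), float(np.std(zero_mean)))   # these agree
print(rms(shifted), float(np.std(shifted)))       # these do not
```

The high-frequency levels of a Laplacian pyramid are differences between an image and its blurred version, so their mean tends to be close to zero and the two measures are close for those levels.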

Laplacian Pyramid Normalization

This code section demonstrates Laplacian normalization in TensorFlow, creating a new graph, defining a placeholder tensor for Laplacian input, and applying the laplacian_normalize function.

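```python
def laplacian_normalize(image, scale_n=4):
    image = tf.expand_dims(image, 0)
    tlevels = laplacian_splitting_n(image, scale_n)
    tlevels = list(map(normalize_std, tlevels))
    out = laplacian_merge(tlevels)
    return out[0, :, :, :]

# Build the normalization in a fresh graph.
with tf.Graph().as_default():
    laplacian_input = tf.placeholder(np.float32, name='laplacian_input')
    # Apply the function to the placeholder, as described above (output name assumed).
    laplacian_output = laplacian_normalize(laplacian_input)
```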

Image Rendering with Laplacian Normalization

This code section defines a function render_laplacian_normalization that renders an image using Laplacian-normalized gradients during optimization.

```python
def render_laplacian_normalization(target_tensor, input_image=noisy_image,
                                   visual_normalization=visual_normalization,
                                   iter_n=10, step=1.0, octave_n=4,
                                   octave_scale=1.4, lap_n=4):
    t_score = tf.reduce_mean(target_tensor)
    t_grad = tf.gradients(t_score, tensor_input)[0]
    # Wrap the Laplacian normalization so it can be called on NumPy arrays.
    lap_norm_func = tensorflow_function(np.float32)(partial(laplacian_normalize, scale_n=lap_n))

    input_image = input_image.copy()
    for octave in range(octave_n):
        if octave > 0:
            hw = np.float32(input_image.shape[:2]) * octave_scale
            input_image = resize(input_image, np.int32(hw))
        for i in range(iter_n):
            g = calculate_gradient_tiled(input_image, t_grad)
            g = lap_norm_func(g)
            input_image += g * step
            print('.', end=' ')
        clear_output()
        show_image(visual_normalization(input_image))
```

Playing with feature visualizations

```python
render_laplacian_normalization(Tensor(layer_name)[:, :, :, channel])
```

Output:

Step 6: DeepDream Algorithm Implementation

The code section uses the DeepDream image rendering algorithm to create visually stunning images by optimizing neural network activations and enhancing patterns and features iteratively.

```python
def deepdream(target_tensor, image=noisy_image, iter_n=8, step=2.0,
              octave_n=7, octave_scale=1.15):
    t_score = tf.reduce_mean(target_tensor)
    t_grad = tf.gradients(t_score, tensor_input)[0]

    # Split the image into octaves, keeping the detail lost at each downscale.
    octaves = []
    for i in range(octave_n - 1):
        hw = image.shape[:2]
        lo = resize(image, np.int32(np.float32(hw) / octave_scale))
        hi = image - resize(lo, hw)
        image = lo
        octaves.append(hi)

    # Generate details octave by octave, from the smallest scale up.
    for octave in range(octave_n):
        if octave > 0:
            hi = octaves[-octave]
            image = resize(image, hi.shape[:2]) + hi
        for i in range(iter_n):
            g = calculate_gradient_tiled(image, t_grad)
            image += g * (step / (np.abs(g).mean() + 1e-7))
            print('.', end=' ')
        #clear_output()
        show_image(image / 255.0)
```
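The update in the inner loop rescales the gradient so that the average absolute change per pixel is close to step, regardless of how large the raw gradient is. The following minimal NumPy sketch shows that effect, assuming random arrays in place of real gradients. `scaled_update` is a hypothetical helper that isolates the one line of arithmetic.

```python
import numpy as np

def scaled_update(gradient, step=2.0, eps=1e-7):
    # Same scaling as the update line in deepdream: divide by the mean absolute value.
    return gradient * (step / (np.abs(gradient).mean() + eps))

rng = np.random.default_rng(0)
for scale in (1e-2, 1.0, 1e3):                  # gradients of very different magnitude
    g = rng.normal(size=(16, 16, 3)) * scale
    update = scaled_update(g)
    print(scale, float(np.abs(update).mean()))  # close to step in every case
```

This is why a single step value can be reused across layers and channels whose raw gradients differ by orders of magnitude. The small eps only matters when the gradient is nearly zero.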

Step 7: Image Loading and Display

```python
image = PIL.Image.open('/content/flower.jpg')
image = np.float32(image)
show_image(image / 255.0)
```

Input image:

Style Selection Dropdown for Image Styling

```python
style_options = {
    'Select a Style': "",
    'Style 1': Tensor(layer_name)[:, :, :, 0] + Tensor(layer_name)[:, :, :, 139] + Tensor(layer_name)[:, :, :, 115],
    'Style 2': Tensor(layer_name)[:, :, :, 1] + Tensor(layer_name)[:, :, :, 139],
    'Style 3': Tensor(layer_name)[:, :, :, 65],
    'Style 4': Tensor(layer_name)[:, :, :, 67] + Tensor(layer_name)[:, :, :, 68] + Tensor(layer_name)[:, :, :, 139],
    'Style 5': Tensor(layer_name)[:, :, :, 68],
    'Style 6': Tensor(layer_name)[:, :, :, 70],
    'Style 7': Tensor(layer_name)[:, :, :, 113],
    'Style 8': Tensor(layer_name)[:, :, :, 114],
    'Style 9': Tensor(layer_name)[:, :, :, 115],
    'Style 10': Tensor(layer_name)[:, :, :, 117],
    'Style 11': Tensor(layer_name)[:, :, :, 121],
    'Style 12': Tensor(layer_name)[:, :, :, 129],
    'Style 13': Tensor(layer_name)[:, :, :, 135],
    'Style 14': Tensor(layer_name)[:, :, :, 137],
    'Style 15': Tensor(layer_name)[:, :, :, 138],
    'Style 16': Tensor(layer_name)[:, :, :, 139],
    'Style 17': Tensor(layer_name)[:, :, :, 1] + Tensor(layer_name)[:, :, :, 13],
}

dropdown = widgets.Dropdown(options=style_options, description='Select Style')

def select_style(change):
    # Store the selected target tensor for the deepdream call.
    global style
    style = change.new

dropdown.observe(select_style, names='value')
display(dropdown)
```

Generate DeepDream Image

```python
deepdream(style, image)
```

Generated output image:

Implementation of Neural Style Transfer

Neural Style Transfer was discussed in detail in the previous tutorial. Refer to that tutorial for the full explanation and a step-by-step implementation.

Conclusion

Deep Dream and Neural Style Transfer open up new opportunities for both creative expression and technical insight. They make it possible to apply artistic styles to images and offer a view into the inner workings of neural networks. Alongside their creative potential, ethical considerations remain important. These techniques represent significant developments in generative AI and will continue to influence visual creativity.