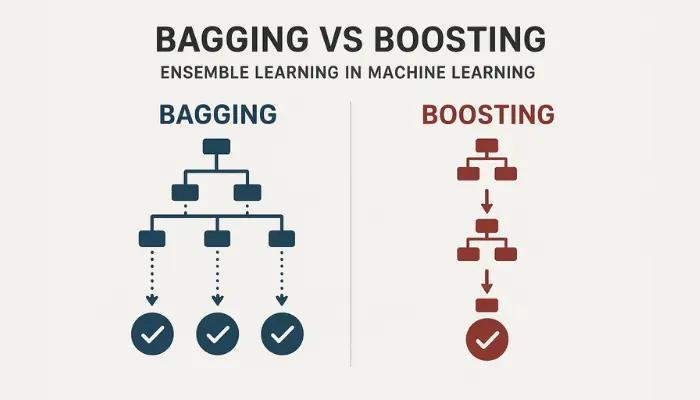

Ensemble methods are a well-established way to improve model performance in machine learning. Two of the most widely used are bagging and boosting. Both combine multiple classifiers into a stronger predictive model, but they use fundamentally different strategies to do so. Understanding these differences helps data scientists and machine learning practitioners choose the right one for a given problem.

Understanding Bagging: Bootstrap Aggregating

Bagging, short for bootstrap aggregating, takes a straightforward yet effective approach to ensemble learning. The process begins with your original dataset and transforms it through a two-step methodology.

How Bagging Works

The first step involves bootstrapping the dataset. This means creating multiple sub-datasets of equal size by sampling with replacement from the original data. Each sub-dataset serves as the training ground for an individual classifier. Because sampling occurs with replacement, some data points may appear multiple times in a sub-dataset, while others might not appear at all.
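The bootstrapping step can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a reference implementation: the helper name `bootstrap_sample`, the toy dataset of 1000 integers, and the fixed seed are all assumptions made for the example.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]

rng = random.Random(0)      # fixed seed, chosen only for reproducibility
data = list(range(1000))    # toy dataset: each item stands for one data point
sample = bootstrap_sample(data, rng)

# Because sampling is with replacement, some points repeat and others are
# missing. On average about 1 - 1/e (roughly 63%) of the original points
# appear in a sub-dataset of the same size.
unique_fraction = len(set(sample)) / len(data)
print(f"sub-dataset size: {len(sample)}, unique fraction: {unique_fraction:.3f}")
```

The points left out of a given sub-dataset are never seen by the classifier trained on it, which is one source of the variation between the individual models.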

The second step is aggregating the results. Once you've trained a classifier on each sub-dataset, you combine all these models into an ensemble classifier. When making predictions on new data, the ensemble collects the output of every individual model and aggregates them into a final prediction, typically by majority vote for classification or by averaging for regression.
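The aggregation step for classification might look like the following sketch. It assumes an unweighted majority vote, and the three threshold "classifiers" are hypothetical stand-ins for models trained on different sub-datasets.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common label among the individual model predictions."""
    return Counter(predictions).most_common(1)[0][0]

def bagged_predict(models, x):
    """Ask every model for a label and aggregate with an unweighted vote."""
    return majority_vote([model(x) for model in models])

# Three hypothetical classifiers, written as plain functions for illustration.
# In a real ensemble each one would be trained on its own bootstrap sample.
models = [
    lambda x: 1 if x > 0.4 else 0,
    lambda x: 1 if x > 0.5 else 0,
    lambda x: 1 if x > 0.6 else 0,
]

print(bagged_predict(models, 0.55))  # two of the three models vote 1
print(bagged_predict(models, 0.45))  # two of the three models vote 0
```

For regression, the same structure applies with the vote replaced by a mean of the individual predictions.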

Because each model depends only on its own bootstrap sample, the models can be trained independently and in parallel. This is bagging's core characteristic. The name itself comes from the two steps: bootstrap plus aggregating.

Understanding Boosting: Sequential Learning from Mistakes

Boosting takes a fundamentally different approach to ensemble learning. Rather than training models in parallel, boosting builds models sequentially, with each new model learning from the mistakes of its predecessors.

How Boosting Works

The process starts by training a classifier on the original dataset. After this initial training, the algorithm examines which samples were correctly classified and which were misclassified. This is where boosting's unique strategy comes into play.

The misclassified samples are weighted up, meaning they receive increased importance in the training of the next model. This weighting ensures that the subsequent classifier pays more attention to the difficult samples that the previous model struggled with, hopefully learning to classify them correctly.

This cycle continues iteratively: train a model, identify misclassified samples, increase their weights, train the next model, and repeat. The process continues until you reach the desired number of models. Like bagging, the final ensemble uses all these models together to make predictions on new data.
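The cycle described above can be sketched with AdaBoost, one well-known boosting algorithm. The text does not name a specific algorithm, so the choice of AdaBoost, the one-dimensional threshold "stumps" used as weak classifiers, and the ten-point toy dataset are all assumptions made for illustration.

```python
import math

def stump_predict(threshold, polarity, x):
    """A decision stump: predict `polarity` at or above the threshold."""
    return polarity if x >= threshold else -polarity

def fit_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for w, x, y in zip(weights, xs, ys)
                      if stump_predict(threshold, polarity, x) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def adaboost(xs, ys, n_rounds):
    n = len(xs)
    weights = [1.0 / n] * n              # start with equal sample weights
    ensemble = []
    for _ in range(n_rounds):
        err, threshold, polarity = fit_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # this model's say in the vote
        ensemble.append((alpha, threshold, polarity))
        # Misclassified samples (y * prediction == -1) are weighted up,
        # correctly classified ones are weighted down, then renormalized.
        weights = [w * math.exp(-alpha * y * stump_predict(threshold, polarity, x))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps; labels are +1 and -1."""
    score = sum(a * stump_predict(t, p, x) for a, t, p in ensemble)
    return 1 if score >= 0 else -1

def accuracy(ensemble, xs, ys):
    return sum(predict(ensemble, x) == y for x, y in zip(xs, ys)) / len(xs)

# Toy data that no single threshold can separate.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

print("1 round :", accuracy(adaboost(xs, ys, 1), xs, ys))
print("3 rounds:", accuracy(adaboost(xs, ys, 3), xs, ys))
```

On this toy data a single stump misclassifies three of the ten points, while three boosted stumps together classify all ten training points correctly. Each later stump concentrates on the points its predecessors got wrong.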

Key Similarities Between Bagging and Boosting

Despite their different approaches, bagging and boosting share some fundamental characteristics that make them both valuable ensemble learning methods.

Both techniques build an ensemble of multiple models rather than relying on a single classifier. This ensemble approach is what gives both methods their power to improve predictions beyond what individual models can achieve.

Additionally, both methods vary the training data seen by each model, though they accomplish this in different ways: bagging resamples the dataset, while boosting reweights it. Finally, both techniques make final predictions by combining the outputs of their constituent models, though the weighting of these models differs significantly between the two approaches.

Critical Differences: Parallel vs Sequential Training

Training Architecture

The most fundamental difference between bagging and boosting lies in their training architecture. Bagging builds models in parallel, meaning all classifiers can be trained simultaneously and independently of one another. In contrast, boosting builds models sequentially, with each model dependent on the results of the previous one.

This architectural difference has significant practical implications. If you have substantial computing resources at your disposal, bagging may be the more suitable choice. Its parallel nature allows you to spread model training across multiple processors or machines, potentially reducing training time dramatically. Boosting cannot train its models in parallel, because each one depends on the results of the previous one. Additional computing power therefore helps less, and any speed-up has to come from parallelizing the work inside a single model's training.
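The independence of bagged models is what makes them easy to distribute. The sketch below maps a hypothetical `train_one` function over a thread pool. The "model" is deliberately trivial (a threshold halfway between the two class means), and the toy data, seeds, and worker count are assumptions for the example. In standard CPython, threads do not speed up pure-Python computation, so a process pool or a library that releases the interpreter lock would be the more realistic choice for real workloads.

```python
import random
from concurrent.futures import ThreadPoolExecutor

def train_one(task):
    """Train one toy model on its own bootstrap sample.

    Each task carries its own seed, so no state is shared between models.
    """
    data, seed = task
    rng = random.Random(seed)
    sample = [rng.choice(data) for _ in data]   # sampling with replacement
    pos = [x for x, y in sample if y == 1]
    neg = [x for x, y in sample if y == 0]
    if not pos or not neg:   # degenerate draw: fall back to the overall mean
        return sum(x for x, _ in sample) / len(sample)
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

# Toy data: class 0 centered at 0.0, class 1 centered at 2.0.
data_rng = random.Random(42)
data = ([(data_rng.gauss(0.0, 1.0), 0) for _ in range(100)]
        + [(data_rng.gauss(2.0, 1.0), 1) for _ in range(100)])

tasks = [(data, seed) for seed in range(8)]

# Order of completion does not matter: no model needs another model's result.
with ThreadPoolExecutor(max_workers=4) as pool:
    thresholds = list(pool.map(train_one, tasks))

print([round(t, 3) for t in thresholds])
```

A boosting loop cannot be rewritten this way, because the sample weights used in round k only exist after round k-1 has finished.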

Dataset Creation and Sample Weighting

Both methods vary the data each model sees, but their approaches differ considerably. Bagging trains each model on a bootstrap sample drawn with replacement from the original dataset, while boosting, as described here, trains every model on the full original dataset and changes only the sample weights.

Another crucial distinction involves sample weighting. In bagging, all samples remain unweighted throughout the process. The random sampling provides the variation between models. In boosting, samples are dynamically weighted based on the predictions of previous classifiers, with misclassified samples receiving higher weights to focus subsequent models on difficult cases.

Prediction Methodology

When making predictions, both methods aggregate the outputs of their models, but they do so differently. In bagging, all classifiers are equally weighted in the ensemble. Each model's vote counts the same regardless of its individual performance.

Boosting takes a different approach by weighting models based on their training performance. Models that perform better during training receive more influence in the final ensemble prediction, while weaker models contribute less to the final decision.
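The difference between the two voting schemes shows up when the models disagree. The sketch below uses the AdaBoost weighting rule as one example of performance-based weighting. The rule, the three votes, and the error rates are assumptions chosen for illustration, not values from a real model.

```python
import math

def model_weight(error_rate):
    """AdaBoost-style weight: a lower training error gives a larger say."""
    return 0.5 * math.log((1 - error_rate) / error_rate)

def equal_vote(votes):
    """Bagging-style vote: every model counts the same. Labels are +1/-1."""
    return 1 if sum(votes) >= 0 else -1

def weighted_vote(votes, error_rates):
    """Boosting-style vote: each model's vote is scaled by its weight."""
    score = sum(model_weight(e) * v for v, e in zip(votes, error_rates))
    return 1 if score >= 0 else -1

# Two weak models (45% training error) vote +1,
# one strong model (5% training error) votes -1.
votes = [1, 1, -1]
error_rates = [0.45, 0.45, 0.05]

print("equal vote   :", equal_vote(votes))
print("weighted vote:", weighted_vote(votes, error_rates))
```

With equal weights the two weak models outvote the strong one. With performance-based weights the single accurate model dominates, because its weight is far larger than the other two combined.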

Bias and Variance: The Performance Trade-off

Understanding how bagging and boosting affect bias and variance is essential for choosing the right method for your problem.

Variance Reduction

Bagging excels at reducing variance, and boosting can reduce it as well. By combining multiple models, both smooth out predictions and reduce sensitivity to particular training samples.

Bias Considerations

The story differs when it comes to bias reduction. Bagging does little to reduce bias. Every bootstrap sample is drawn from the same data, and every model uses the same learning algorithm, so the models share the same systematic errors. Averaging cancels errors that differ between models, not errors they all make, so the bias of the individual models carries over to the ensemble.
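A short calculation makes the bias and variance behavior of bagging concrete. As a simplifying assumption not stated above, suppose the ensemble averages $B$ models $f_1, \dots, f_B$ that each have the same expected prediction, the same variance $\sigma^2$, and the same pairwise correlation $\rho$ (all of these symbols are introduced here for illustration):

$$
\mathbb{E}\left[\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right] = \mathbb{E}\left[f_1(x)\right],
\qquad
\operatorname{Var}\left[\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right] = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

The expectation, and therefore the bias, is unchanged by averaging, while the variance shrinks toward $\rho\,\sigma^2$ as $B$ grows. Under these assumptions, bagging helps most when the individual models are only weakly correlated.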

Boosting, however, can reduce both bias and variance. By iteratively focusing on misclassified samples and adjusting sample weights from one model to another, boosting can learn more complex patterns and reduce bias more effectively than bagging.

Overfitting Risk

This bias reduction capability comes with a trade-off. Boosting's aggressive focus on difficult samples makes it more prone to overfitting compared to bagging. The sequential weighting process can cause the ensemble to become too specialized to the training data, particularly when dealing with noisy datasets or when training too many models.

Bagging, with its simpler approach of random sampling and equal weighting, tends to be more robust against overfitting, making it a safer choice when you're concerned about model generalization.

Choosing Between Bagging and Boosting

The decision between bagging and boosting depends on several factors specific to your project:

Consider bagging when:

- You have significant computing resources and want to minimize training time through parallelization

- Your primary concern is reducing variance without introducing additional complexity

- You want a more robust method that's less prone to overfitting

- You're working with noisy data that might cause boosting to overfit

Consider boosting when:

- You need to reduce both bias and variance in your model

- Training time is less critical than achieving the best possible performance

- Your dataset is clean and you're less concerned about overfitting

- You're willing to invest time in tuning hyperparameters to prevent overfitting

Conclusion

Bagging and boosting are two powerful but distinct approaches to ensemble learning. Bagging's parallel training, random sampling, and equal weighting make it a robust and efficient choice for variance reduction. Boosting's sequential training, adaptive weighting, and focus on difficult samples enable it to reduce both bias and variance, though at the cost of increased overfitting risk and training that cannot be parallelized across models.

Understanding these differences allows you to make informed decisions about which ensemble method best suits your specific machine learning problem. The key is to weigh your computational resources, data characteristics, and performance requirements when making your choice.