Meta's Llama 4 models are a game-changer for anyone interested in artificial intelligence! Whether you are a programmer, a creator, or simply curious, this guide shows you how to tap into their power through an API. It takes only a few simple steps and no technical wizardry. Let's start with the basics: what is Llama 4, why is it so good, and how can you start using it today?

What Is Llama 4?

Llama 4 is Meta's latest family of AI models, released in April 2025. The models are open-weight, meaning anyone can download and use them under Meta's Llama 4 Community License, and they are natively multimodal: they handle both text and images. Designed for research and real-world applications, Llama 4 currently comes in two models, Scout (light and fast) and Maverick (powerful and precise), while a third, much larger model, Behemoth, is still in development.

Key Features and Benefits

- Multimodal Power: It processes text and images together, which suits tasks like captioning photos or answering questions about images.

- Mixture of Experts (MoE): Each token is routed to a small subset of specialized "expert" subnetworks, so only part of the model runs at a time. Scout has 16 experts (17B active out of 109B total parameters), and Maverick has 128 (also 17B active, out of 400B total).

- Huge Context Windows: Scout handles up to 10 million tokens (think very long documents), and Maverick handles 1 million.

- Multilingual Skills: It natively supports 12 languages and was pre-trained on data covering 200 languages for broader understanding.

- Speed and Efficiency: It runs fast on inference platforms such as GroqCloud (460+ tokens per second for Scout).
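To make the Mixture of Experts idea concrete, here is a toy sketch in plain Python. It is not Meta's implementation: the tiny 4-dimensional "experts", the random router weights, the expert count, and the choice of one routed expert per token (TOP_K = 1) are all illustrative assumptions. What it does show is the core mechanism: a router scores every expert for a token, and only the top-scoring expert(s) actually run, so the parameters used per token are a small fraction of the total.

```python
import math
import random

# Toy Mixture-of-Experts layer (illustrative only, not Llama 4's real code).
DIM = 4            # size of each token vector
NUM_EXPERTS = 16   # assumption: borrowed from Scout's expert count for flavor
TOP_K = 1          # assumption: route each token to a single expert

random.seed(0)

def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]

experts = [rand_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]  # one tiny linear map each
router = rand_matrix(NUM_EXPERTS, DIM)                          # one score row per expert

def moe_forward(token):
    """Route one token to its top-k experts and mix their outputs."""
    scores = matvec(router, token)
    top = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    # Softmax over the selected experts' scores only
    exps = [math.exp(scores[i]) for i in top]
    total = sum(exps)
    gates = [e / total for e in exps]
    out = [0.0] * DIM
    for gate, i in zip(gates, top):
        y = matvec(experts[i], token)  # only the chosen experts do any work
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token)
params_per_expert = DIM * DIM
print(f"experts used: {used}")
print(f"active expert params: {len(used) * params_per_expert} of {NUM_EXPERTS * params_per_expert}")
```

Here each token touches 1 of 16 experts, so only 16 of the 256 expert weights are used per token. Real MoE models apply the same idea at far larger scale, while components such as attention stay active for every token.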

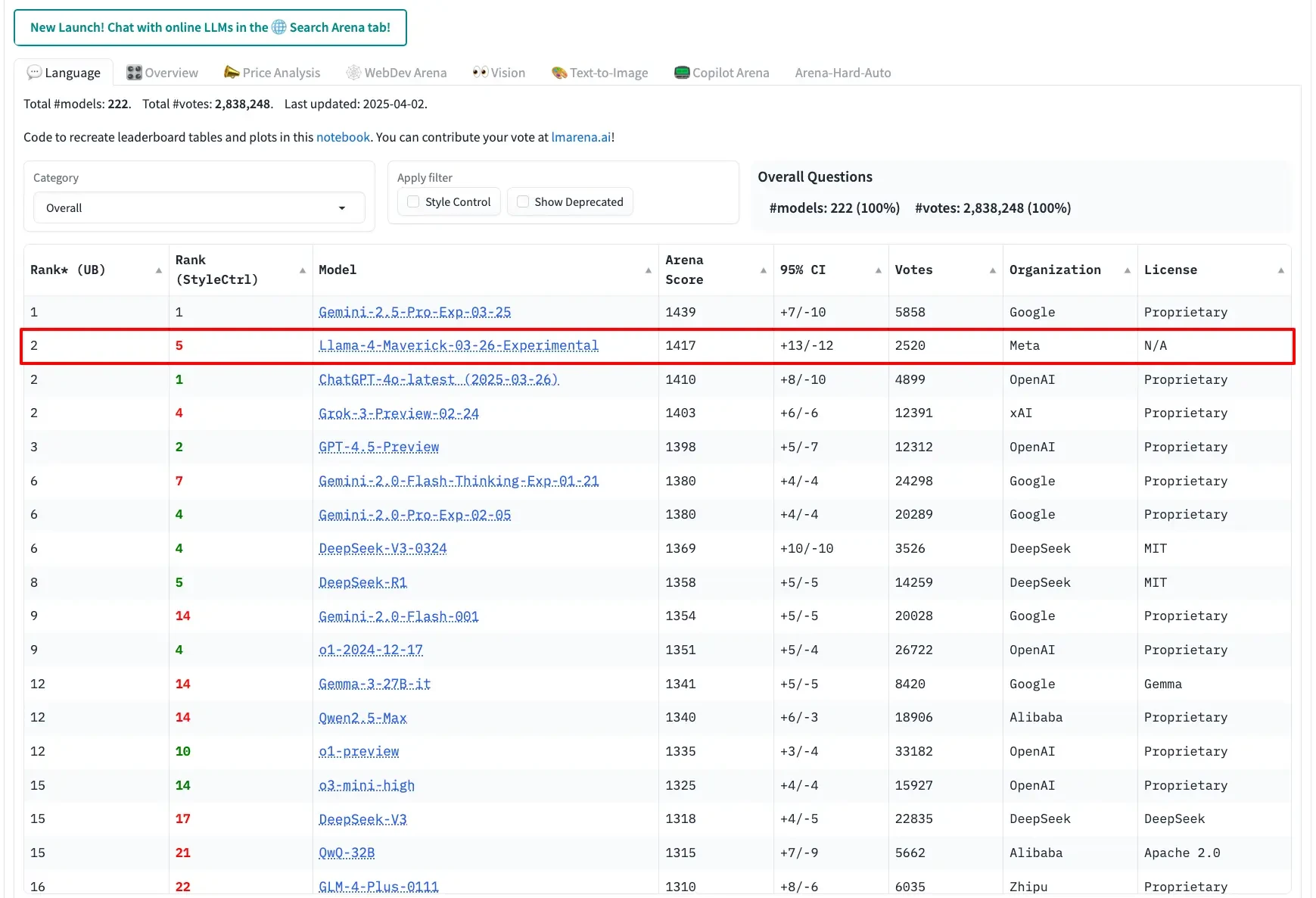

Why Llama 4 Ranks #2 in the LMSYS Chatbot Arena

An experimental, chat-optimized version of Llama 4 Maverick reached the number two spot in the LMSYS Chatbot Arena (now LMArena) with an Elo score of 1417, ahead of strong models such as GPT-4o and Gemini 2.0 Flash. Why? It excels at:

- Image Reasoning: 73.4% on MMMU (multimodal tasks).

- Code Generation: Hits 43.4% on LiveCodeBench.

- Multilingual Tasks: 84.6% on Multilingual MMLU.

Source: LMArena

It's efficient, too: Scout fits on a single H100 GPU (with Int4 quantization), and Maverick runs on a single H100 host, which keeps costs low and makes Llama 4 a top pick for both power and affordability.
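A back-of-the-envelope calculation shows why the hardware claims differ between the two models. The sketch below estimates weight memory only (parameters × bits per parameter ÷ 8), using Meta's published totals of about 109B parameters for Scout and 400B for Maverick. It ignores the KV cache, activations, and runtime overhead, so real requirements are higher, and the 80 GB figure assumes the 80 GB H100 variant.

```python
import math

# Rough weight-memory estimate: bytes = parameters * bits_per_param / 8.
# Weights only: KV cache, activations and runtime overhead are ignored.
H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant

# Total parameter counts published by Meta (both models use 17B active).
MODELS = {
    "Llama 4 Scout": 109e9,
    "Llama 4 Maverick": 400e9,
}

def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

for name, params in MODELS.items():
    for bits, label in [(16, "BF16"), (8, "FP8"), (4, "INT4")]:
        gb = weight_memory_gb(params, bits)
        gpus = math.ceil(gb / H100_MEMORY_GB)  # minimum GPUs just to hold the weights
        print(f"{name:16s} {label:4s}: {gb:6.1f} GB -> at least {gpus} x H100 (weights only)")
```

Under these assumptions, Scout's weights take roughly 54.5 GB at INT4, which fits in one 80 GB H100, while Maverick needs about 400 GB even at FP8. That is why Maverick targets a multi-GPU host: an 8-GPU H100 server has 640 GB in total.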

How to Use Meta's Llama 4 AI Models

You've got three main ways to use Llama 4:

- Chat on Meta AI: A simple web interface, no setup needed.

- Download the Models: Get the model weights from llama.com for full control.

- Use an API: Connect through providers such as GroqCloud or Hugging Face for easy integration.

This guide focuses on the API route: it's the simplest way to unlock Llama 4's potential without managing servers.

Access Llama 4 on Meta AI

Want to try Llama 4 instantly? Visit meta.ai and start chatting; no sign-up is required. Ask "Which model are you?" and it should confirm it's Llama 4. It's excellent for quick tests, but there's no API access or customization here, just a taste of what's possible.

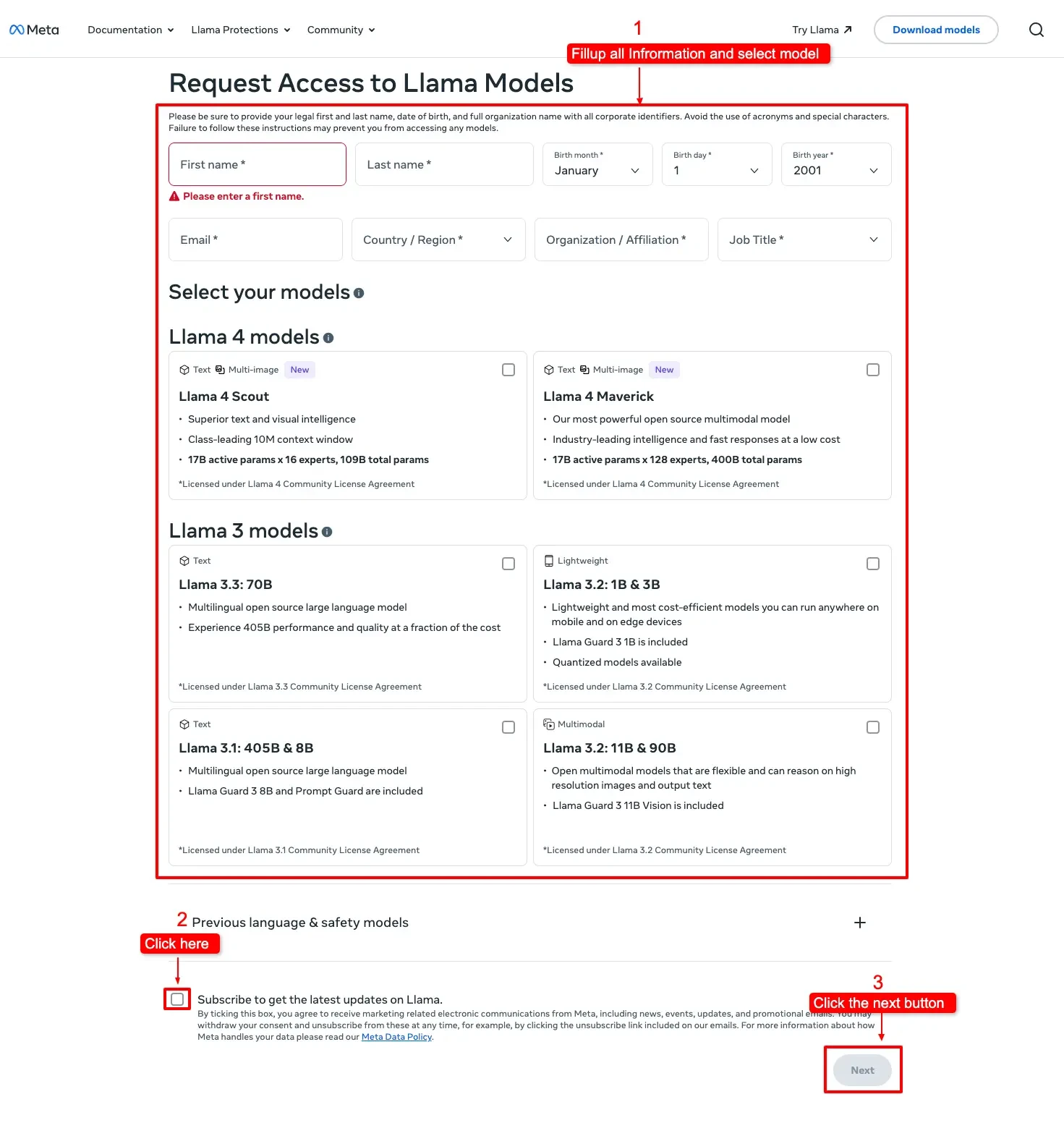

Download Llama 4 Model Weights from Llama.com

For total control, download Scout and Maverick from llama.com. Fill out a request form with your details (name, organization, etc.), and once approved (usually quickly), you'll get access to the model weights. This route is best for developers with the hardware to run the models locally or in the cloud; no chat interface is included.

Use Llama 4 via API: Leading Providers and Interfaces

APIs make Llama 4 accessible without the hassle. Here are the best platforms:

- GroqCloud: Fast and free to start, with both Scout and Maverick.

- Hugging Face: Developer hub with API access and model downloads.

- OpenRouter: Free API access on its free model variants, plus a chat interface.

- Cloudflare Workers AI: Serverless access to Scout, with a free tier for basic use.

- Together AI: Free credits and high-performance APIs.

Each offers unique perks: Groq for speed, Hugging Face for tooling, and OpenRouter for free access. Pick one and let's roll!
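Several of these providers expose an OpenAI-compatible chat completions endpoint, so one small client can target any of them. The sketch below builds such a request using only the Python standard library. The base URLs and model IDs in PROVIDERS are assumptions based on each provider's public documentation and may change, and the helper names build_chat_request and send are hypothetical; check each provider's docs before relying on them.

```python
import json
import os
import urllib.request

# Assumed (base URL, model ID, API-key env var) per provider; verify in each provider's docs.
PROVIDERS = {
    "groq": ("https://api.groq.com/openai/v1",
             "meta-llama/llama-4-scout-17b-16e-instruct", "GROQ_API_KEY"),
    "openrouter": ("https://openrouter.ai/api/v1",
                   "meta-llama/llama-4-scout", "OPENROUTER_API_KEY"),
    "together": ("https://api.together.xyz/v1",
                 "meta-llama/Llama-4-Scout-17B-16E-Instruct", "TOGETHER_API_KEY"),
}

def build_chat_request(provider: str, prompt: str, max_tokens: int = 100) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    base_url, model, key_var = PROVIDERS[provider]
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get(key_var, '')}",
        },
        method="POST",
    )

def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (needs network and a valid key)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request("groq", "Say hello"))` would call GroqCloud once GROQ_API_KEY is set; switching providers changes only the first argument. The official SDKs add retries and typed responses, so prefer them for real projects.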

How to Access Llama 4 Models - Full Guide

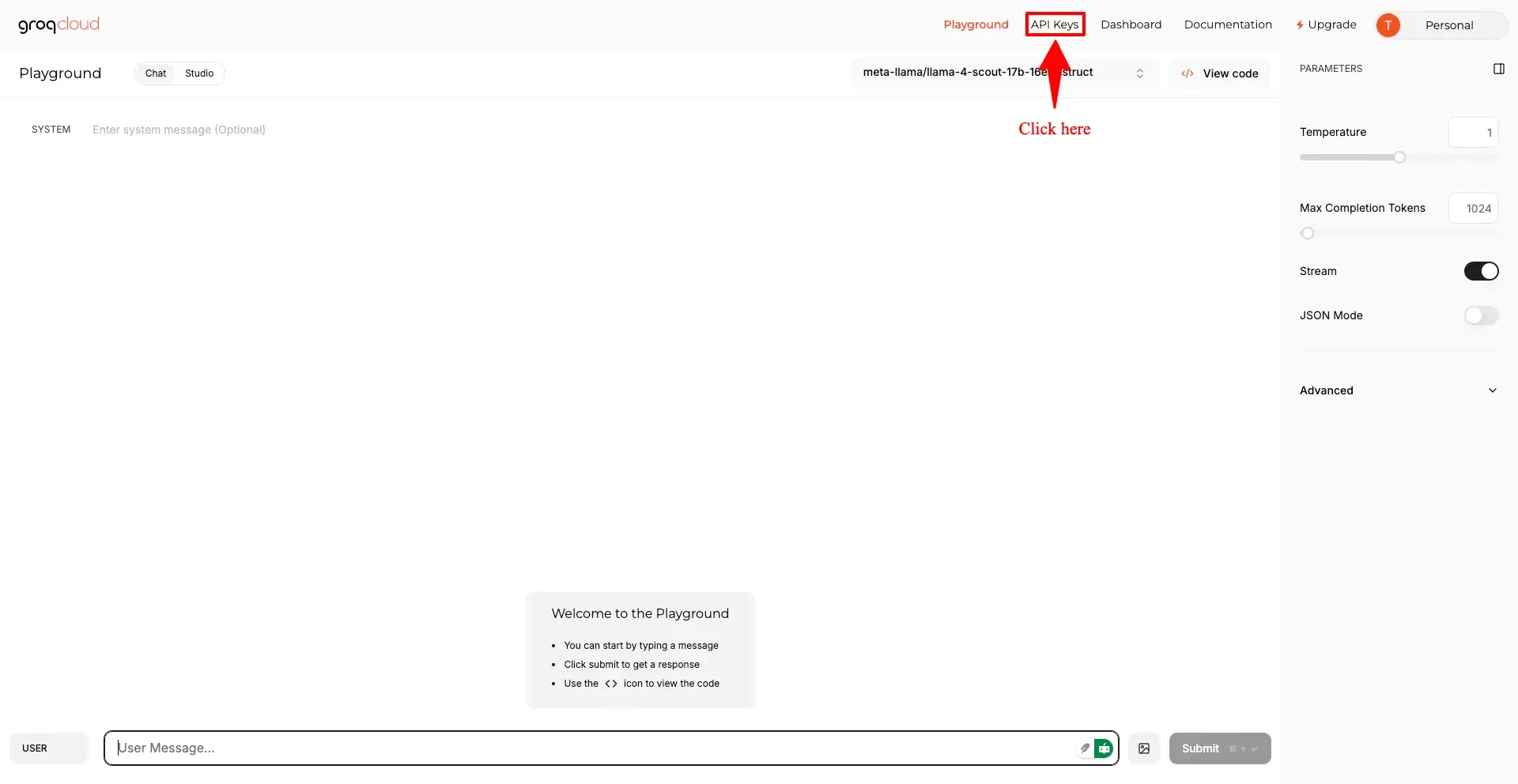

Here's the full process, using GroqCloud as an example:

- Sign Up: Create a free account at groq.com.

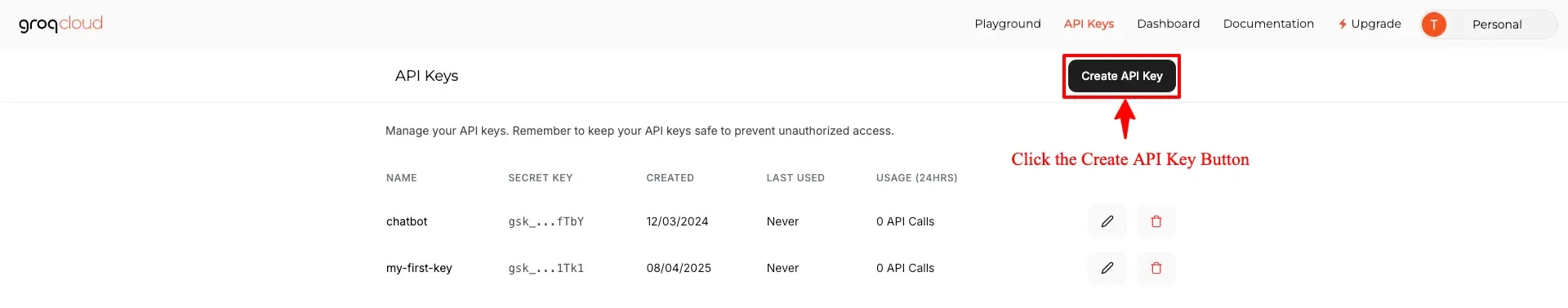

- Get Your API Key: Find it in your dashboard under "API Keys" and copy it.

- Install Tools: Use Python and the Groq SDK (details below).

- Run Code: Send requests to Scout or Maverick and get responses.

- Experiment: Try text, images, or both!

Try Llama 4 via API

With the right setup, it's effortless! Meta's Llama 4 models, Scout and Maverick, are available on various platforms, and this walkthrough uses one of the most popular options: GroqCloud. Here's how to get started (as of April 7, 2025).

Prerequisites

- GroqCloud Account: Sign up at groq.com.

- API Key: Grab it from your dashboard.

- Python: Installed on your computer.

- Groq SDK: Install it with pip install groq.
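Before running the examples, a quick check can confirm the prerequisites are in place. This is a minimal sketch; check_prerequisites is a hypothetical helper, and it only verifies that the groq package is importable and that the GROQ_API_KEY environment variable is non-empty, not that the key is valid.

```python
import importlib.util
import os

def check_prerequisites(key_var: str = "GROQ_API_KEY") -> list[str]:
    """Return a list of setup problems; an empty list means you're ready."""
    problems = []
    # find_spec returns None when a package cannot be imported
    if importlib.util.find_spec("groq") is None:
        problems.append("Groq SDK not found: run `pip install groq`")
    # The setup code below reads the API key from this environment variable
    if not os.environ.get(key_var):
        problems.append(f"{key_var} is not set")
    return problems

issues = check_prerequisites()
if issues:
    for issue in issues:
        print("Problem:", issue)
else:
    print("All prerequisites look OK.")
```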

Step-by-Step Setup: Using the Groq Client

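```python
# Install dependencies (the "!" prefix runs a shell command in Jupyter/Colab;
# in a terminal, run `pip install groq` instead)
!pip install groq

# Import libraries
import os
from groq import Groq

# Set your API key (safer: export GROQ_API_KEY in your shell instead of
# hard-coding it, so the key never ends up in your code)
os.environ["GROQ_API_KEY"] = "ADD YOUR GROQ API KEY"

client = Groq(api_key=os.environ.get("GROQ_API_KEY"))
```

Example 1: Text Summarization Using Llama 4 via Groq API in Python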

This code sends a long paragraph about generative AI to Llama 4 Scout through the Groq API and prints a short summary. Because max_tokens=50 limits the reply, the output is cut off mid-sentence.

```python
# Multiline long_text for readability (adjacent string literals are joined)
long_text = (
    "GenAI, short for Generative Artificial Intelligence, refers to AI systems capable of "
    "generating new content, ideas, or data that mimic human-like creativity. This technology "
    "leverages deep learning algorithms to produce outputs ranging from text and images to "
    "music and code, based on patterns it learns from vast datasets. GenAI uses large language "
    "models like the Generative Pre-trained Transformer (GPT) and Variational Autoencoders (VAEs) "
    "to analyze and understand the structure of the data it’s trained on, enabling the generation "
    "of novel content."
)

prompt = f"Summarize this: {long_text}"

response = client.chat.completions.create(
    model="meta-llama/llama-4-scout-17b-16e-instruct",
    messages=[{"role": "user", "content": prompt}],
    max_tokens=50,  # caps the reply length
)

print("Summary:", response.choices[0].message.content)
```

Output:

Summary: Here's a summary:

**GenAI (Generative Artificial Intelligence)** is a type of AI that can generate new content, ideas, or data that mimics human creativity. It uses:

* Deep learning algorithms

* Large language models like GPT and VA
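To catch truncated replies like this one programmatically, look at each choice's finish_reason. Groq's responses follow the OpenAI chat completions format, in which finish_reason is "length" when max_tokens cut the reply off and "stop" when the model finished on its own. The helper below is a minimal sketch: get_reply is a hypothetical name, and the SimpleNamespace object is an offline stand-in for a real response so the example runs without an API key.

```python
from types import SimpleNamespace

def get_reply(response) -> str:
    """Return the first choice's text, warning if max_tokens cut it short."""
    choice = response.choices[0]
    # "length" means the token limit was hit; "stop" means a natural ending
    if choice.finish_reason == "length":
        print("Warning: reply truncated by max_tokens; consider raising the limit.")
    return choice.message.content

# Hypothetical offline stand-in shaped like a chat completions response
fake_response = SimpleNamespace(choices=[SimpleNamespace(
    finish_reason="length",
    message=SimpleNamespace(content="Here's a summary: GenAI is a type of AI"),
)])

print("Summary:", get_reply(fake_response))
```

With the real client, call get_reply(response) in place of response.choices[0].message.content.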

Example 2: Generate a Short Story with Llama 4 via Groq API in Python

```python
# Define the prompt for a short story
prompt = "Write a brief story about a futuristic city powered by AI, in 3 sentences."

response = client.chat.completions.create(
    model="meta-llama/llama-4-scout-17b-16e-instruct",
    messages=[{"role": "user", "content": prompt}],
    max_tokens=100,
)

print("Story:", response.choices[0].message.content)
```

Output:

Story: In the year 2154, the city of New Eden was a marvel of modern technology, powered by an advanced artificial intelligence system known as "The Nexus" that managed every aspect of urban life, from energy production to transportation and governance. The Nexus, a vast network of interconnected servers and drones, had created a utopian society where resources were abundant, crime was nonexistent, and citizens lived in perfect harmony with their surroundings.
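For longer outputs like this story, you may prefer to print text as it arrives. The Groq SDK supports this with stream=True, which returns an iterator of chunks whose new text is in chunk.choices[0].delta.content (it can be None, typically on the final chunk). A minimal sketch follows; stream_story is a hypothetical helper, and the fake client is a stand-in so the example runs offline.

```python
from types import SimpleNamespace

def stream_story(client, prompt: str,
                 model: str = "meta-llama/llama-4-scout-17b-16e-instruct",
                 max_tokens: int = 100) -> str:
    """Print a reply as it streams in and return the full text."""
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
        stream=True,  # yield incremental chunks instead of one response
    )
    parts = []
    for chunk in stream:
        piece = chunk.choices[0].delta.content or ""  # content may be None
        print(piece, end="", flush=True)
        parts.append(piece)
    print()
    return "".join(parts)

# Hypothetical offline stand-in for a Groq client, yielding fake chunks
def _fake_create(**kwargs):
    for text in ["In 2154, ", "New Eden ", "ran on AI.", None]:
        yield SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])

fake_client = SimpleNamespace(chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create)))
story = stream_story(fake_client, "Write a brief story about a futuristic city powered by AI.")
```

With the client from the setup step, call stream_story(client, prompt) instead.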

Example 3: Using Llama 4 via Groq API to Code a Sum Function in Python

```python
# Define the prompt with clearer wording
prompt = "Write a Python function to calculate the sum of integers from 5 to 19."

response = client.chat.completions.create(
    model="meta-llama/llama-4-scout-17b-16e-instruct",
    messages=[{"role": "user", "content": prompt}],
    max_tokens=200,
)

# Print the model's answer
print("Code:", response.choices[0].message.content)
```

Output:

Code: **Calculating the Sum of Integers from 5 to 19**

====================================================

Here is a Python function that calculates the sum of integers from 5 to 19:

```python
def sum_integers(start: int, end: int) -> int:
    """
    Calculate the sum of integers from start to end.

    Args:
        start (int): The starting integer.
        end (int): The ending integer.

    Returns:
        int: The sum of integers from start to end.
    """
    return sum(range(start, end + 1))


# Example usage
if __name__ == "__main__":
    start = 5
    end = 19
    result = sum_integers(start, end)
    print(f"The sum of integers from {start} to {end} is: {result}")
```
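Model-generated code is worth checking before you rely on it. The generated function sums range(start, end + 1), which includes both endpoints. An independent check uses the arithmetic-series formula: the sum of consecutive integers from a to b is (b - a + 1)(a + b) / 2, so for 5 to 19 it is 15 × 24 / 2 = 180. The sketch below compares the two on a few ranges; series_sum is a hypothetical helper name.

```python
def sum_integers(start: int, end: int) -> int:
    """Model-generated version: sum of integers from start to end, inclusive."""
    return sum(range(start, end + 1))

def series_sum(a: int, b: int) -> int:
    """Closed form for consecutive integers a..b; the product is always even."""
    return (b - a + 1) * (a + b) // 2

# Compare both approaches on the prompt's range and a few edge cases
for a, b in [(5, 19), (1, 100), (-3, 3), (7, 7)]:
    assert sum_integers(a, b) == series_sum(a, b), (a, b)

print(sum_integers(5, 19))  # 180
```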

Conclusion

Llama 4 is a robust model family: open-weight, multimodal, and accessible through APIs. Whether you are summarizing long documents, generating code, or interpreting images, it is up to the task. Providers such as GroqCloud and Hugging Face let you start small and scale up to larger models later. With Behemoth on the horizon, the future looks bright, so jump in now and see what you can build!

FAQs

Q: Is Llama 4 free?

A: Largely, yes. The weights are free to download from llama.com, and some providers, such as OpenRouter, offer free API access. Others charge for heavier usage; for example, Groq charges $0.11 per million input tokens for Scout (output tokens cost more).
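To put per-token pricing in perspective, here is a small cost estimate. The $0.11 per million input tokens figure is the Groq price quoted above; the output price of $0.34 per million tokens and the workload sizes are assumptions for illustration, so check your provider's current pricing page before budgeting.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of a workload, given prices per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000

INPUT_PRICE = 0.11   # $ per million input tokens (Groq figure quoted above)
OUTPUT_PRICE = 0.34  # $ per million output tokens: assumption, check current pricing

# Hypothetical workload: 1,000 requests, ~2,000 input and ~200 output tokens each
num_requests = 1_000
cost = estimate_cost(num_requests * 2_000, num_requests * 200, INPUT_PRICE, OUTPUT_PRICE)
print(f"Estimated cost: ${cost:.4f}")
```

Under these assumptions, 2 million input tokens cost $0.22 and 200,000 output tokens cost about $0.07, for a total of roughly $0.29.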

Q: Can it handle images?

A: Absolutely! Both Scout and Maverick process up to 5 images per prompt.
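Here is a minimal sketch of sending an image, assuming GroqCloud's OpenAI-style message format, in which content is a list of "text" and "image_url" parts and local images are passed as base64 data URLs. The helper names image_message and message_from_file are hypothetical, and provider limits on image size and count may differ, so check the provider's vision documentation.

```python
import base64
import mimetypes

def image_message(image_bytes: bytes, question: str, mime_type: str = "image/jpeg") -> dict:
    """Build a user message pairing a question with an inline base64 image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }

def message_from_file(path: str, question: str) -> dict:
    """Read an image from disk, guessing its MIME type from the file extension."""
    mime_type = mimetypes.guess_type(path)[0] or "image/jpeg"
    with open(path, "rb") as f:
        return image_message(f.read(), question, mime_type)
```

With the client from the setup step, pass `[message_from_file("photo.jpg", "What is in this image?")]` as messages to client.chat.completions.create, using the Scout model ID from the examples; photo.jpg is a hypothetical file name.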

Q: What's the difference between Scout and Maverick?

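A: Scout is lighter (16 experts) and has a huge 10-million-token context window; Maverick uses 128 experts for higher quality and has a 1-million-token window. Both activate 17B parameters per token.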

Q: Do I need a powerful computer?

A: No. With an API, the provider does all the heavy lifting; you only need a basic setup and an internet connection.

Q: How current is its knowledge?

A: Trained up to August 2024, so it's fresh but won't know today's news.