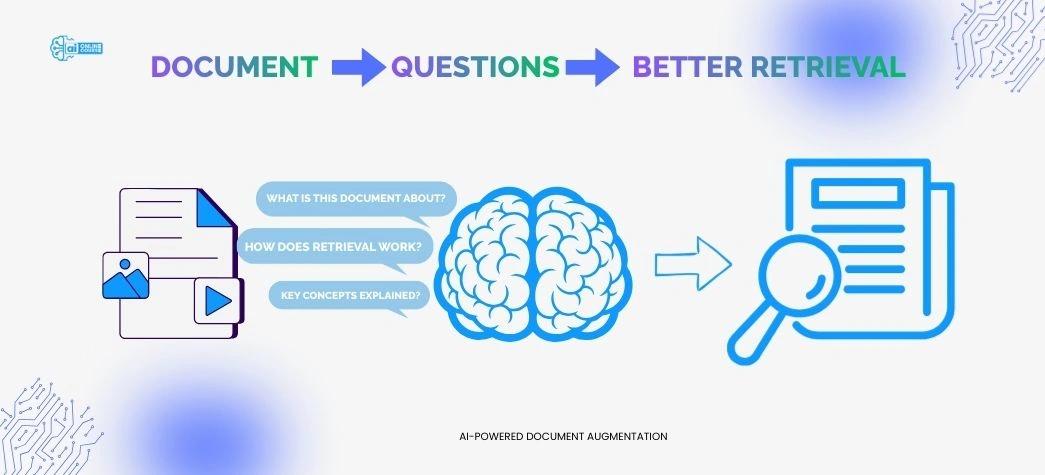

Document Augmentation through Question Generation for Enhanced Retrieval

This project improves document retrieval by augmenting text with generated questions. Generating additional questions from the text content increases the chance that a search retrieves the most relevant text fragments. These fragments then serve as the context for generative question-answering tasks, using OpenAI's language models to produce answers from documents.

Project Overview

The implementation demonstrates a document augmentation technique that uses question generation to improve retrieval from a vector database. Each text fragment is indexed together with questions generated from it, so a user query can match either the fragment text or a question the fragment answers, which makes relevant sections easier to find. The pipeline covers PDF processing, question augmentation, FAISS vector store creation, and retrieval of documents for answer generation, using OpenAI's models for question generation, embeddings, and answering.

Prerequisites

- Python 3.8+ (for compatibility with LangChain, OpenAI API and FAISS)

- Google Colab or Local Machine (for execution environment)

- OpenAI API Key (for generating embeddings and using the GPT-4o model)

- LangChain (for document processing and retrieval logic)

- FAISS (for storing and retrieving document embeddings)

- PyPDF2 (for PDF document reading and conversion to text)

- Pydantic (for data modeling and validation)

- langchain-openai (for OpenAI model integration with LangChain)

Approach

The approach uses OpenAI's language models to generate questions automatically and so improve document retrieval. First, the content of a document, typically a PDF, is extracted and split into smaller chunks based on token size and overlap. Questions are then generated either for each fragment or for the document as a whole, depending on the configuration, and used to augment the document fragments. FAISS provides the vector store in which the augmented fragments and questions are embedded for efficient similarity search.

Once the documents are processed and indexed, a retriever fetches the most relevant fragments for a user query by embedding the query and comparing it with the stored embeddings. The context of the most relevant fragment is then passed to the language model to generate an accurate, concise answer. Because a query can match a generated question as well as the fragment text, search relevance improves and answers stay grounded in the document content.

Workflow and Methodology

Workflow

- Document Input: A PDF document is provided for processing.

- Document Extraction: The document content is extracted into text using PyPDF2.

- Text Splitting: The extracted text is split into smaller fragments based on specified token limits.

- Question Generation: Questions are automatically generated from the document or fragments using OpenAI’s GPT-4 model.

- Vectorization: The document fragments and generated questions are embedded using OpenAI's embeddings model.

- Indexing: FAISS is used to create a vector store that indexes the embedded fragments and questions for efficient retrieval.

- Query Handling: A user query is provided and the retriever searches for the most relevant fragments based on the query.

- Answer Generation: The context of the most relevant fragment is used to generate a precise answer using the language model.
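The text splitting step in the workflow above can be sketched as a sliding window with overlap. This is a minimal illustration, not the project's splitter: the function name `split_into_chunks` is hypothetical, and whitespace-separated words stand in for model tokens, which a real pipeline would count with a tokenizer.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into overlapping chunks of at most `chunk_size` tokens.

    Assumption: tokens are approximated by whitespace-separated words.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    tokens = text.split()
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # this window already reached the end of the text
    return chunks


print(split_into_chunks("a b c d e f g h i j", chunk_size=4, chunk_overlap=1))
```

The overlap repeats the last token(s) of one chunk at the start of the next, so a sentence that straddles a chunk boundary is still seen in full by at least one fragment.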

Methodology

- Document Processing: Split the document into smaller chunks to handle large content efficiently.

- Question Generation: Use OpenAI's GPT-4 model to generate questions that are contextually relevant and answerable from the document.

- FAISS Vector Store: Embed the document fragments and questions, storing them in a FAISS vector store for fast retrieval.

- Query Embedding: The user query is embedded to identify the most relevant documents from the vector store.

- Retrieval and Answering: Retrieve the most relevant fragments from the store and generate an answer using the context of those fragments. This ensures the answer is directly tied to the content of the document.
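To illustrate why indexing generated questions next to the fragments helps retrieval, here is a small self-contained sketch. Everything in it is an assumption made for illustration: a bag-of-words counter stands in for OpenAI embeddings, a Python list stands in for the FAISS store, the questions are hand-written stand-ins for model output, and the names `embed`, `cosine`, `build_index`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase bag-of-words counts (stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(fragments: List[str], questions: Dict[int, List[str]]) -> List[Tuple[Counter, int]]:
    """Index every fragment and every generated question; each entry keeps its fragment id."""
    index = []
    for frag_id, fragment in enumerate(fragments):
        index.append((embed(fragment), frag_id))
        for question in questions.get(frag_id, []):
            index.append((embed(question), frag_id))
    return index


def retrieve(query: str, index: List[Tuple[Counter, int]], fragments: List[str]) -> str:
    """Return the fragment that owns the index entry most similar to the query."""
    query_vec = embed(query)
    _, best_id = max(index, key=lambda entry: cosine(query_vec, entry[0]))
    return fragments[best_id]


fragments = [
    "Rising seas threaten coastal cities and small island nations.",
    "Burning coal, oil and gas releases carbon dioxide, which traps heat in the atmosphere.",
]
# Hand-written stand-ins for questions a language model would generate.
questions = {
    0: ["Which places are threatened by rising seas?"],
    1: ["What causes global warming?", "Which fuels release carbon dioxide?"],
}

index = build_index(fragments, questions)
print(retrieve("What is causing global warming?", index, fragments))
```

In this toy setup the query shares no words with the second fragment itself, yet it matches the question generated for that fragment, so the retriever still returns the right text. Real embeddings capture meaning beyond exact word overlap, but the same mechanism applies: questions phrase the content the way users tend to ask about it.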

Data Collection and Preparation

Data Collection

The PDF document used in the example is named "Climate_Change.pdf". It is located at the path:

/content/drive/MyDrive/Document Augmentation through Question Generation for Enhanced Retrieval/Climate_Change.pdf

Data Preparation Workflow

- Collect PDFs: Gather documents.

- Extract Text: Use PyPDF2 to extract text.

- Split Documents: Break text into chunks.

- Generate Questions: Use GPT-4 for question generation.

- Clean Questions: Filter and validate questions.

- Generate Embeddings: Convert to embeddings.

- Create FAISS Store: Store embeddings for search.

- Index Data: Prepare for query retrieval.
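The "Clean Questions" step above can be sketched as follows. The specific rules here (strip list numbering, keep only text ending in a question mark, drop case-insensitive duplicates) are assumptions chosen for illustration, and `clean_questions` is a hypothetical name, not the project's own function.

```python
import re
from typing import List


def clean_questions(raw_questions: List[str]) -> List[str]:
    """Strip list numbering, keep only lines ending in '?', and drop duplicates."""
    cleaned = []
    seen = set()
    for raw in raw_questions:
        # Remove leading bullets or numbering such as "1. ", "2) " or "- ".
        question = re.sub(r"^\s*(?:\d+[.)]|[-*•])\s*", "", raw).strip()
        if not question.endswith("?"):
            continue  # not a complete question
        key = question.lower()
        if key in seen:
            continue  # duplicate, ignoring case
        seen.add(key)
        cleaned.append(question)
    return cleaned


raw = [
    "1. What is climate change?",
    "2) what is climate change?",
    "- Greenhouse gases",
    "  How do oceans absorb heat?  ",
]
print(clean_questions(raw))
```

Filtering before embedding keeps malformed or repeated questions out of the vector store, where they would otherwise add noise to similarity search.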

Code Explanation

Installing Required Libraries

This command installs the required Python libraries. LangChain and OpenAI provide access to language models, FAISS-CPU performs efficient similarity search, PyPDF2 reads and manipulates PDFs, Pydantic handles data validation and settings management, and python-dotenv loads the API key from a .env file.

!pip install langchain openai faiss-cpu PyPDF2 pydantic python-dotenv

Upgrading langchain-community

This command upgrades the langchain-community library to the latest version. It ensures you have the most recent features and updates for building language model applications with community enhancements.

!pip install -U langchain-community

Installing langchain-openai

This command installs the langchain-openai library, which integrates OpenAI's models with the LangChain framework. It allows you to use OpenAI's language models for various tasks like natural language processing and conversational AI.

!pip install langchain-openai

Mounting Google Drive in Colab

This code mounts your Google Drive to the Colab environment, allowing you to access files stored in your drive. After running it, you'll be able to interact with your drive's contents directly within Colab under the /content/drive directory.

from google.colab import drive

drive.mount('/content/drive')

Setting Up OpenAI API Key and Libraries

This code sets up the necessary libraries and configurations to use OpenAI's models in a project. It imports essential modules like langchain for language processing, FAISS for vector storage and OpenAIEmbeddings for embedding generation. The script loads the OpenAI API key either from the Colab secrets or a .env file, ensuring secure access to the API. If the API key is missing, it raises an error to prompt the user to add it.

from typing import Any, Dict, List, Tuple

from langchain_core.documents import Document

from langchain_community.vectorstores import FAISS

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from enum import Enum

import re

import os

import sys

from dotenv import load_dotenv

# Import necessary modules from pydantic

from pydantic import BaseModel, Field

# Import PromptTemplate

from langchain_core.prompts import PromptTemplate

try:
    from google.colab import userdata
    api_key = userdata.get("OPENAI_API_KEY")
except ImportError:
    api_key = None  # Not running in Colab

if not api_key:
    # Fall back to a local .env file
    load_dotenv()
    api_key = os.getenv("OPENAI_API_KEY")

if api_key:
    # Expose the loaded key to the OpenAI client libraries
    os.environ["OPENAI_API_KEY"] = api_key
else:
    raise ValueError("❌ OpenAI API Key is missing! Add it to Colab Secrets or .env file.")

sys.path.append(os.path.abspath(os.path.join(os.getcwd(), '..')))

print("OPENAI_API_KEY setup completed successfully!")

Configuring Question Generation and Token Limits

This code sets the level of question generation (document or fragment level) and defines token limits for documents and fragments. It also specifies the number of questions to generate per document or fragment.